By Neil Perkins

And so the REF 2014 results are upon us. If you listen closely you can hear academics all around the country trying desperately to find the method of expressing the results that most favours their own Institution (or downplays bitter rivals). Of course, this is a technique commonly used in publishing research articles so there is a lot of expertise in this area.

Anyway, however you wrangle the figures, in ICaMB we think we’ve done pretty well (whisper it quietly, possibly better than we expected when our return was submitted).

Most ICaMB scientists went into UoA5 (Biological Sciences), although a number of us were also included in the UoA1 (Clinical Medicine) and UoA3 (Allied Health Professions, Dentistry, Nursing and Pharmacy) submissions. In fact, UoA5 contained only ICaMB members and was written and submitted by ICaMB members. So this is the right place to congratulate ICaMB’s Professor Brian Morgan, who masterminded our UoA5 submission with the help of Amanda Temby. We hope Brian has recovered from the ordeal by now.

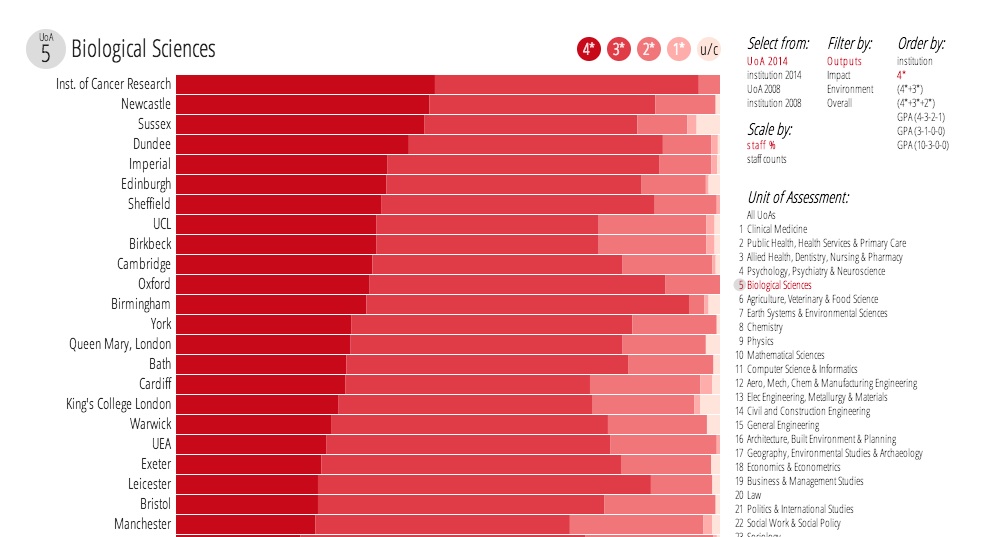

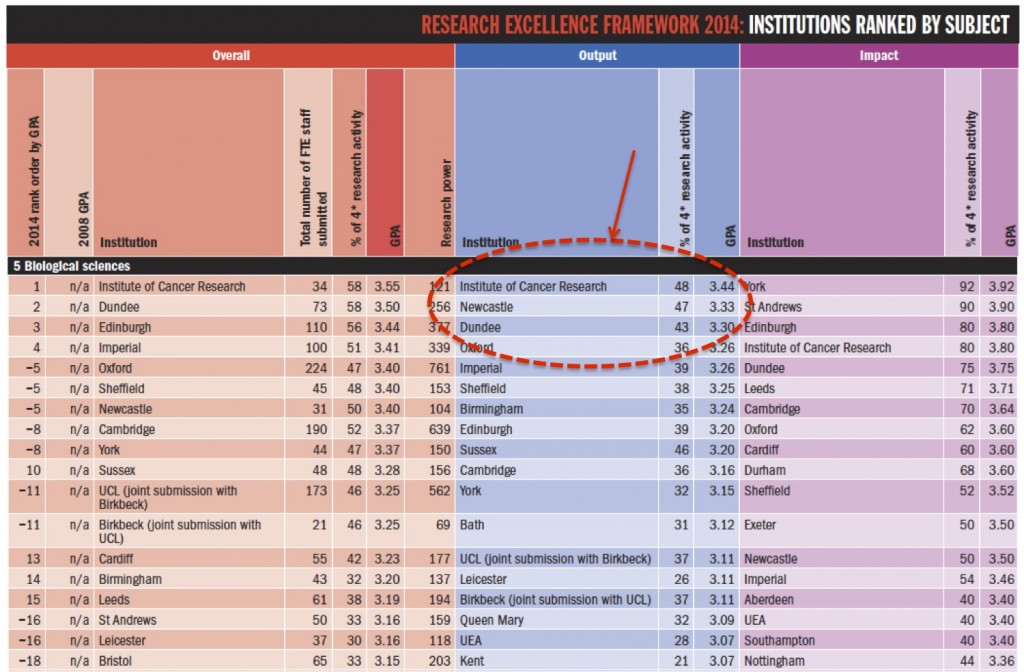

So how did we do? If we go by the Times Higher Education table, then Newcastle (i.e. ICaMB) came joint 5th overall. However, in the clearly much more important ‘Output’ table we came 2nd in the country!! I suspect that’s the one that will end up on the front page of our website. Our ‘Impact’ submissions dragged us down a bit. I remember the meetings where we struggled with the tight definition used for ‘Impact’, something not easy for an Institute that really focuses on fundamental science. We work on important and relevant subjects, but the impact of that work on medicine or biotechnology is often a few steps removed.

The Times Higher Education ranking for UoA5, Biological Sciences. The most important section (cough) is highlighted.

It would be remiss of me not to point out that our sister UoA submissions in Newcastle also did well:

UoA1 (Clinical Medicine) came 9th out of 31, UoA3 (Allied Health Professions, Dentistry, Nursing and Pharmacy) was 15th out of 94, and UoA4 (Psychology, Psychiatry and Neuroscience) was 9th out of 82.

Lies, damned lies and…..

I like this viewer put together by City University London:

http://www.staff.city.ac.uk/~jwo/refviewer/

And if I tweak the parameters in just the right way……

……. Hurrah! Second again!

A big BUT

OK, if we were to be slightly self-critical, it could be noted that our UoA5 submission included a relatively low number of staff compared with many other Institutions, although those staff make up the substantial majority of ICaMB. It was very much the ICaMB submission, with many others in our Faculty going into UoA1, Clinical Medicine.

However, this is also an exercise in who decodes the rules most successfully (and there was head scratching at times over ambiguities and what they really meant). So what better time than now, after having done well (so it cannot be dismissed as sour grapes), to repeat that the REF really is a bad way to go about assessing the relative research strengths of UK universities. The arguments for why this is the case have been aired before in detail (also here) and I will not go over them all again here. I think that every academic I speak with agrees. People involved in this work phenomenally hard at all levels of the university, but it has to be said that the REF is a colossal, time-consuming juggernaut of dubious worth. Speaking to colleagues who were members of REF panels, I was horrified at just how many papers they were expected to read. You do not need to go that far outside my area of expertise before my judgement becomes quite superficial. Quite how anyone thinks this process leads to an unequivocal assessment of research quality is beyond me. However, as an entire industry seems to have grown up around the REF, incentives for change are few.

So what could replace it? Well, as academics we are judged and assessed continually as part of our normal jobs. Our grant applications are rigorously reviewed. Our papers are refereed in detail. There are citation indices and download statistics showing whether our papers are actually being read. While individual applications or submissions are subject to some randomness, across an Institution and over time these are the measures that really assess how well we are doing. The information for them is already out there and would be relatively quick to compile.

Of course, there are caveats to this. Different disciplines receive different levels of funding, and some are cited less heavily than others. But it should not be beyond the wit of the academic community to come up with different weightings for different subject areas. Wouldn’t it be refreshing if someone at the top came out and said, ‘never again, there has to be a better way of doing this’? However, I suspect this might be wishful thinking. I’ll just wait fearfully for the email saying “Neil, about REF 2020, Brian did a great job last time and we’d really like it if you could…..”

The opinions expressed in this article are those of the author and do not reflect those of Newcastle University.