Chris Harrison is an astronomer and a Newcastle University Academic Track Fellow (NUAct). Here he reflects on the good and bad aspects of reproducible science in observational astronomy and describes how he is using Newcastle’s Research Repository to set a good example. We are keen to hear from colleagues across the research landscape, so please do get in touch if you’d like to write a post.

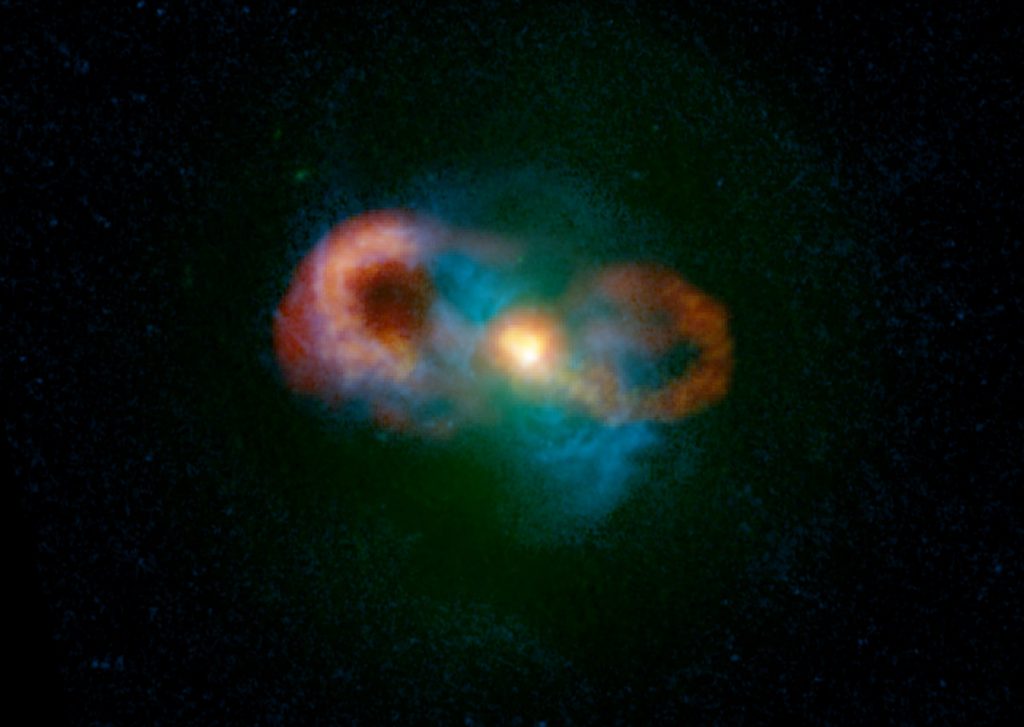

I use telescopes on the ground and in space to study galaxies and the supermassive black holes that lurk at their centres. These observations result in gigabytes to terabytes of data being collected for each project. In particular, when using interferometers such as the Very Large Array (VLA) or the Atacama Large Millimeter/submillimeter Array (ALMA), the raw data can be hundreds of gigabytes from just one night of observations. These raw data products are then processed to produce two-dimensional images, one-dimensional spectra or three-dimensional data cubes, which are used to perform the scientific analyses. Although I mostly collect my own data, every so often I have felt compelled to write a paper in which I tried to reproduce results from other people’s observational data and analyses. This has been in situations where the results were quite sensational and appeared to contradict previous results, or conflicted with my expectations based on my understanding of theoretical predictions. As I write this, I have another paper under review that directly challenges previous work. This comes after a year of struggling to reproduce the previous results! Why has this been so hard, and what can we do better?

On the one hand, most astronomical observatories have incredible archives where all raw data products ever taken can be accessed by anyone once the proprietary period, typically one year long, has expired (great examples are the ALMA and VLA archives). The data always come with comprehensive metadata and are provided in standard formats, so that they can be accessed and processed by anyone with a variety of open access software. On the other hand, from painful experience, I can tell you that it is still extremely challenging to reproduce other people’s results based on astronomical observational data. This is due to the many complex steps that are taken to go from the raw data products to a scientific result. Indeed, these steps are so complex that it is basically not possible to describe them all adequately in a publication. The only real solution for completely reproducible science would be to publicly release the processed data products and the codes that were used both to produce and to analyse them. I have even requested such products and codes from authors, only to find that they had been lost forever on broken hard drives. As early-career researchers work in a competitive environment and have vulnerable careers, one cannot blame them for wanting to keep their hard work to themselves (potentially for follow-up papers) and for not wanting to expose themselves to criticism. The many disappointing reasons why early-career researchers are so vulnerable, and how this damages scientific progress, are too much to discuss here. However, as I am now in an academic track position, I feel more confident to set a good example and hopefully encourage other, more senior academics to do the same.

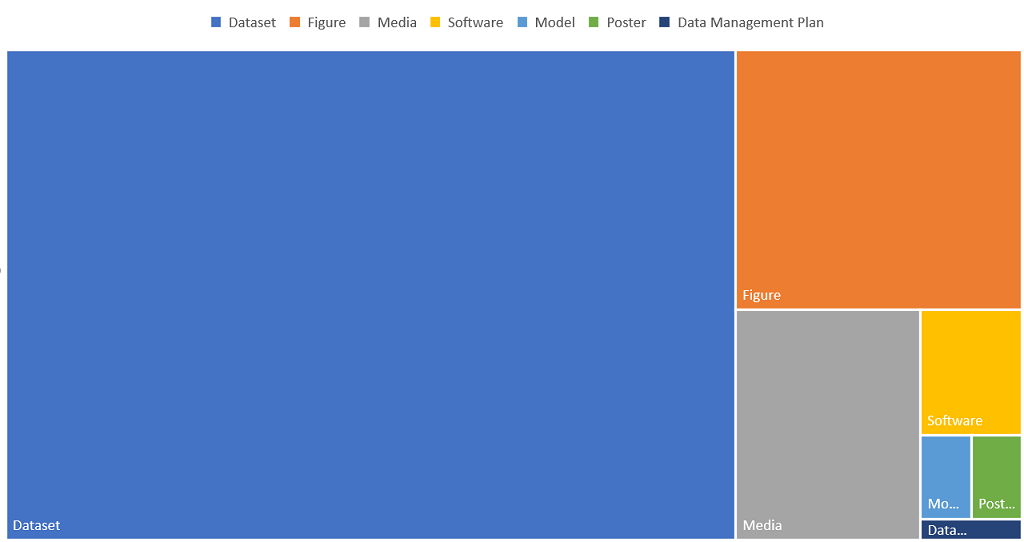

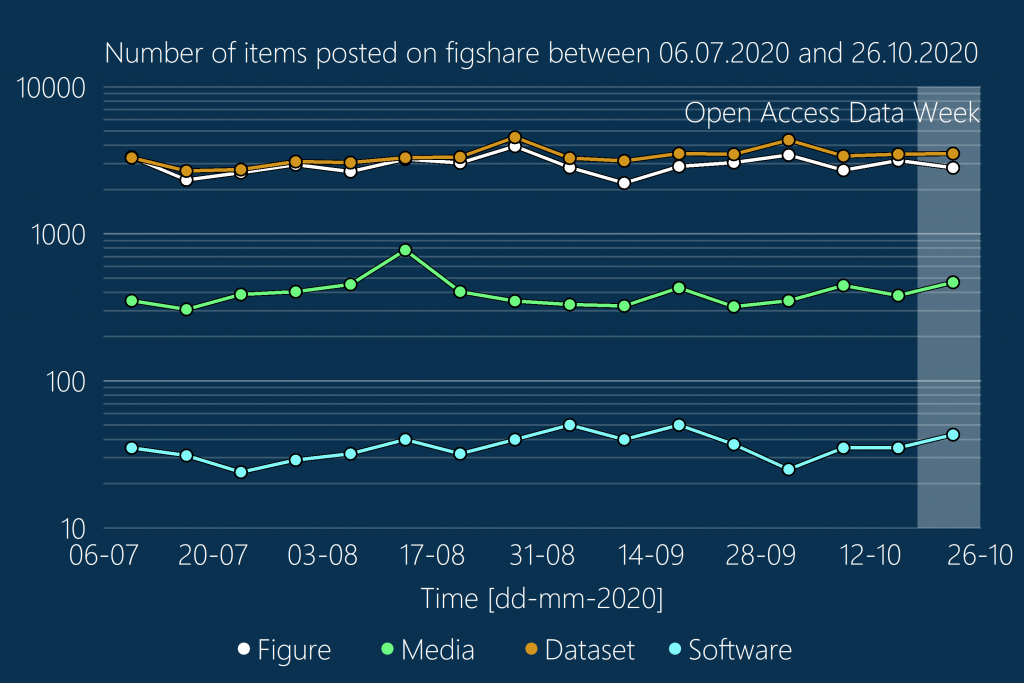

In March 2021 I launched the “Quasar Feedback Survey”, which is a comprehensive observational survey of 42 galaxies hosting rapidly growing black holes. We will be studying these galaxies with an array of telescopes. With the launch of this survey, I uploaded 45 gigabytes of processed data products to data.ncl (Newcastle’s Research Repository), including historic data from the pilot projects that led to this wider survey. All information about the data products and results can also easily be accessed via a dedicated website. I already know that these galaxies, and hence the data, are of interest to other astronomers, and our data products are being used right now to help design new observational experiments. As the survey continues, new data products will be uploaded alongside the relevant publications. The next important step for me is to find a way to also share the codes, whilst protecting the career development of the early-career researchers who produced them.

To be continued!

Image Credit: C. Harrison, A. Thomson; Bill Saxton, NRAO/AUI/NSF; NASA.