I received an overwhelming response to the introductory blog post about the algebra of graphs; thank you all for your remarks, questions and suggestions! In the second part of the series I will show that the algebra is not restricted only to directed graphs, but can be extended to axiomatically represent undirected graphs, reachability and dependency graphs (i.e. preorders and partial orders), their various combinations, and even hypergraphs.

Update: This series of blog posts was published as a functional pearl at the Haskell Symposium 2017.

Why algebra?

Before we continue, I’d like to note that any data structure for representing graphs (e.g. an edgelist, matrix-based representations, inductive graphs from the fgl library, GHC’s standard Data.Graph, etc.) can satisfy the axioms of the algebra with appropriate definitions of empty, vertex, overlay and connect, and I do not intend to compare these implementations against each other. I’m more interested in implementation-independent (polymorphic) functions that we can write and reuse, and in proving properties of these functions using the laws of the algebra. That’s why I think the algebra is worth studying.

As a warm-up exercise, let’s look at a few more examples of such polymorphic graph functions. One of the threads in the reddit discussion was about the path graph P4: i.e. the graph with 4 vertices connected in a chain. Here is a function that can construct such path graphs on a given list of vertices:

path :: Graph g => [Vertex g] -> g path [] = empty path [x] = vertex x path xs = fromEdgeList $ zip xs (tail xs) p4 :: (Graph g, Vertex g ~ Char) => g p4 = path ['a', 'b', 'c', 'd']

Note that graph p4 is also polymorphic: we haven’t committed to any particular data representation, but we know that the vertices of p4 have type Char.

If we connect the last vertex of a path to the first one, we get a circuit graph, or a cycle. Let’s express this in terms of the path function:

circuit :: Graph g => [Vertex g] -> g circuit [] = empty circuit (x:xs) = path $ [x] ++ xs ++ [x] pentagon :: (Graph g, Vertex g ~ Int) => g pentagon = circuit [1..5]

From the definition we expect that a path is a subgraph of the corresponding circuit. Can we express this property in the algebra? Yes! It’s fairly standard to define a ≤ b as a + b = b for idempotent + and it turns out that this definition corresponds to the subgraph relation on graphs:

isSubgraphOf :: (Graph g, Eq g) => g -> g -> Bool isSubgraphOf x y = overlay x y == y

We can use QuickCheck to test that our implementation satisfies the property:

λ> quickCheck $ \xs -> path xs `isSubgraphOf` (circuit xs :: Basic Int) +++ OK, passed 100 tests.

QuickCheck can only test the property w.r.t. a particular instance, in this case we chose Basic Int, but using the algebra we can prove that it holds for all law-abiding instances of Graph (I leave this as an exercise for the reader).

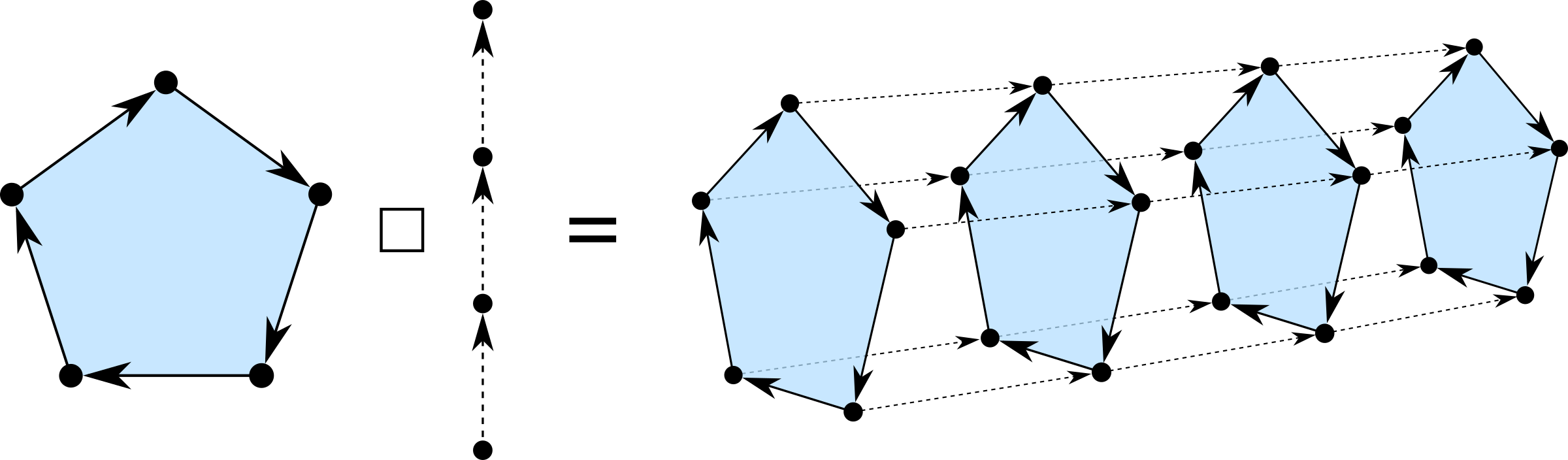

As a final example, we will implement Cartesian graph product, usually denoted as G

box :: (Functor f, Foldable f, Graph (f (a,b))) => f a -> f b -> f (a,b) box x y = foldr overlay empty $ xs ++ ys where xs = map (\b -> fmap (,b) x) $ toList y ys = map (\a -> fmap (a,) y) $ toList x

The Cartesian product G

toList from the Foldable instance and turn vertices of original graphs into pairs of vertices by fmap from the Functor instance.

As you can see, the code is still implementation-independent, although it requires that the graph data type is a Functor and a Foldable. Just like lists, trees and other containers, most graph data structures have Functor, Foldable, Applicative and Monad instances (e.g. our Basic data type has them all). Here is how pentagon `box` p4 looks:

(A side note: the type signature of box reminds me of this blog post by Edward Yang and makes me wonder if Functor, Foldable plus idempotent and commutative Monoid together imply Monoidal, as it seems that I only had to use empty and overlay from the Graph type class. This seems odd.)

Undirected graphs

As I hinted in the previous blog post, to switch from directed to undirected graphs it is sufficient to add the axiom of commutativity for the connect operator. For undirected graphs it makes sense to denote connect by ↔ or —, hence:

- x ↔ y = y ↔ x.

Curiously, with the introduction of this axiom the associativity of ↔ follows from the (left-associated version of) decomposition axiom and commutativity of +:

(x ↔ y) ↔ z |

= | x ↔ y + x ↔ z + y ↔ z | (left decomposition) |

| = | y ↔ z + y ↔ x + z ↔ x | (commutativity of + and ↔) | |

| = | (y ↔ z) ↔ x | (left decomposition) | |

| = | x ↔ (y ↔ z) | (commutativity of ↔) |

Commutativity of the connect operator forces graph expressions that differ only in the direction of edges into the same equivalence class. One can implement this by the symmetric closure of the underlying connectivity relation:

newtype Undirected a = Undirected { fromUndirected :: Basic a } deriving (Arbitrary, Functor, Foldable, Num, Show) instance Ord a => Eq (Undirected a) where x == y = toSymmetric x == toSymmetric y toSymmetric :: Ord a => Undirected a -> Relation a toSymmetric = symmetricClosure . toRelation . fromUndirected

As you can see, we simply wrap our Basic implementaion in a newtype with a custom Eq instance that takes care of the commutativity of ↔. We know that the resulting Undirected datatype satisfies all Graph laws, because we only made some previously different expressions equal but not vice versa.

Partial orders

In many applications graphs satisfy the transitivity property: if vertex x is connected to y, and y is connected to z, then the edge between x and z can be added or removed without changing the semantics of the graph. A common example is dependency graphs. The semantics of such graphs is typically a partial order on the set of vertices. To describe this class of graphs algebraically we can add the following closure axiom:

- y ≠ ε ⇒ x → y + y → z + x → z = x → y + y → z

By using the axiom one can always rewrite a graph expression into its transitive closure or, alternatively, into its transitive reduction, hence all graphs that differ only in the existence of some transitive edges are forced into the same equivalence class. Note: the precondition (y ≠ ε) is necessary as otherwise + and → can no longer be distinguished:

x → z = x → ε → z = x → ε + ε → z + x → z = x → ε + ε → z = x + z

It is interesting that + and → have a simple meaning for partial orders: they correspond to parallel and sequential composition of partial orders, respectively. This allows one to algebraically describe concurrent systems — I will dedicate a separate blog post to this topic.

We can implement a PartialOrder instance by wrapping Basic in a newtype and providing a custom equality test via the transitive closure of the underlying relation, just like we did for undirected graphs:

newtype PartialOrder a = PartialOrder { fromPartialOrder :: Basic a } deriving (Arbitrary, Functor, Foldable, Num, Show) instance Ord a => Eq (PartialOrder a) where x == y = toTransitive x == toTransitive y toTransitive :: Ord a => PartialOrder a -> Relation a toTransitive = transitiveClosure . toRelation . fromPartialOrder

Let’s test that our implementation correctly recognises the fact that path graphs are equivalent to cliques when interpreted as partial orders:

λ> quickCheck $ \xs -> path xs == (clique xs :: PartialOrder Int) +++ OK, passed 100 tests.

Indeed, if we have a series of n tasks, where each task (apart from task 1) depends on the previous task (as expressed by path [1..n]), then task 1 is transitively a prerequisite for all subsequent tasks, task 2 is a prerequisite for tasks [3..n] etc., which can be expressed by clique [1..n].

Reflexive graphs

A partial order is reflexive (also called weak) if every element is related to itself. An example of a reflexive partial order is isSubgraphOf as introduced above: indeed, x `isSubgraphOf` x == True for all graphs x. To represent reflexive graphs algebraically we can introduce the following axiom:

- vertex x = vertex x → vertex x

This enforces that each vertex has a self-loop. The implementation of Reflexive data type is very similar to that of Undirected and PartialOrder so I do not show it here (it is based on the reflexive closure of the underlying relation).

Note: cyclic reflexive partial orders correspond to preorders, for example:

(1 + 2 + 3) → (2 + 3 + 4)

is a preorder with vertices 2 and 3 forming an equivalence class. We can find the strongly-connected components and derive the following condensation:

{1} → {2, 3} → {4}

One way to interpret this preorder as a dependency graph is that tasks 2 and 3 are executed as a step, simultaneously, and that they both depend on task 1.

Mixing graph flavours

We can mix the three new axioms above in various combinations. For example, the algebra of undirected, reflexive and transitively closed graphs describes the laws of equivalence relations. Notably, it is not necessary to keep information about all edges in such graphs and there is an efficient implementation based on the disjoint set data structure. If you are curious about potential applications of such graphs, have a look at this paper where I use them to model switching networks. More precisely, I model families of switching networks; this requires another extension to the algebra: a unary condition operator, which I will cover in a future blog post.

Hypergraphs

This thread in the Hacker News discussion leads me another twist of the algebra. Let’s replace the decomposition axiom with 3-decomposition:

- w → x → y → z = w → x → y + w → x → z + w → y → z + x → y → z

In words, instead of collapsing all expressions to vertices and edges (pairs of vertices), as we did with the 2-decomposition, we now collapse all expressions to vertices, edges and triples (or hyperedges of rank 3). I haven’t yet figured out whether the resulting algebra is particularly useful, but perhaps the reader can provide an insight?

To see the difference between 2- and 3-decomposition clearer, let’s substitute ε for w in 3-decomposition and simplify:

x → y → z = x → y + x → z + y → z + x → y → z

Looks familiar? It’s almost the 2-decomposition axiom! Yet there is no way to get rid of the term x → y → z on the right side: indeed, a triple is unbreakable in this algebra, and one can only extract the pairs (edges) that are embedded in it. In fact, we can take this further and rewrite the above expression to also expose the embedded vertices:

x → y → z = x + y + z + x → y + x → z + y → z + x → y → z

With 2-decomposition we can achieve something similar:

x → y = x + y + x → y

which I call the absorption theorem. It says that an edge x → y has vertices x and y (its endpoints) embedded in it. This seems intriguing but I have no idea where it leads to, I guess we’ll figure out together!

P.S.: All code snippets above are available in the alga repository. Look how nicely we can test the library thanks to the algebraic API!

At risk of being trivial and/or unuseful, this last decomposition looks like a canonical lifting to a higher dimension. We see x as a global point, that is as an arrow from 1 (the terminal object) to U, where U contains, say x and y. Then one can see the arrow itself from x to y as a natural transformation between such lifted elements. (I imagine you have checked coherent spaces for the rest it sounds like it has some overlap)

Thank you for an interesting comment! I have to admit though that I don’t fully understand it, because I know just a few most basic constructs in category theory. Could you please clarify?

For example, a natural transformation is defined between two functors, but what are they in this example? I can see one functor, which takes x to y, and arrow 1 -> x to arrow 1 -> y. What is the second functor?

I haven’t looked into coherent spaces, because that’s the first time I see this term, so I’ll need to do some reading about it.

So, as you can see your comment is far from being trivial, at least for me! 🙂