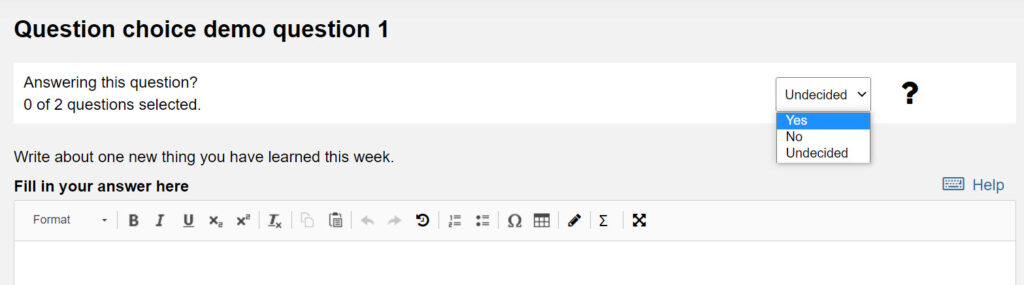

Digital Exam Support Assistants (DESAs) are postgraduate research (PGR) students who support invigilators in digital exam venues by helping students troubleshoot technical issues with the Safe Exam Browser software. Safe Exam Browser works alongside Inspera to provide a secure, ‘locked down’ digital exam. Inspera Assessment is the University’s digital exam system, used for present-in-person, secure online assessments.

How do DESAs support exam invigilators in digital exams?

DESAs are on hand to help students and invigilators troubleshoot issues with accessing Inspera in Bring Your Own Device (BYOD) exams. Exam invigilators have reported that the presence of DESAs makes them feel more confident in digital exam venues. Feedback described DESAs as a ‘confidence booster’, and invigilators said they ‘couldn’t do it without them’. Invigilators also reported that DESAs responded to queries quickly, a point echoed by students who received DESA support.

How do students find the DESA support?

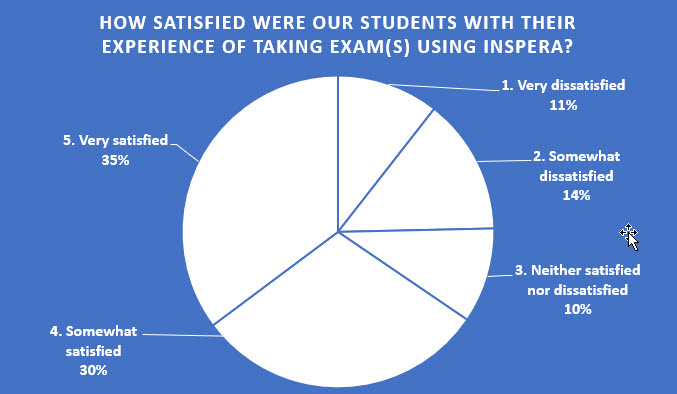

Thirty-nine students submitted feedback on their Semester 1 2022/23 BYOD exam. When asked how satisfied they were with the technical support available in their exam, two thirds of students (67%) reported that they were satisfied or very satisfied.

Students reported that ‘those who requested support were dealt with quickly and there was little hassle.’

How did the DESAs find their experience?

We asked some of our DESAs how they found their experience in the role this year. Check out some of the quotes below:

“I had a wonderful experience with the team. Enough training was given to staff. Would like to work with the team again. Thanks for giving me the opportunity.”

“Regarding my experience in the DESA role this academic year, it provided me with a valuable opportunity to contribute to the Digital Assessment Office and engage with fellow students. The role not only enhanced my understanding of digital assessment practices but also allowed me to develop essential skills in communication and collaboration. I am grateful for the experience and the chance to be a part of improving the assessment process at Newcastle University.”

What’s next?

We are pleased to report that the DESA role will be returning in the 2023/24 academic year. This provision has been crucial in helping our students troubleshoot issues during their BYOD digital exams. For more information, you can email the Digital Assessment Team.

You can find out more about Inspera in our other blog posts on Inspera and on our Inspera Digital Exams webpage.