As part of the geospatial engineering team’s ongoing involvement in the ITRC project, researcher David Alderson, accompanied by newly recruited Computing Science PhD student Mr Razgar Ebrahimy, attended a workshop kindly organised by Margot Weijnen at TU Delft, entitled “Modelling change and adaptation in infrastructure systems: state-of-the-art modelling and simulation approaches”. The workshop brought together researchers and academics from the Next Generation Infrastructures (NGI) team at TU Delft and representatives from work streams 1 and 2 of ITRC, and also welcomed Professor Pascal Perez, Research Director of the SMART Infrastructure Facility at the University of Wollongong, Australia. The aim was to share the experiences, projects and outputs of each research team, and so help build and maintain the research links and community developing between these three groups.

The morning session of the workshop consisted of a series of short presentations, each delivered by a representative of one of the aforementioned groups, followed by a discussion session on the topics and concepts raised. First we heard from Professor Paulien Herder, who argued that research into the current and possible future states of infrastructure systems needs to combine two things: an understanding of the traditional physical, technical components that come to mind when infrastructure is mentioned (e.g. power stations, roads, water treatment works), and an understanding of the social actors that operate, maintain, build and ultimately consume the services offered by infrastructure. Many studies of infrastructure systems tend to focus on the technical aspects of the systems. These are clearly of paramount importance in delivering the levels of service society has come to expect from infrastructure, but such studies perhaps do not give enough consideration to the impacts that “people” have on the performance and evolution of infrastructure over time.

The audience subsequently heard from Pascal Perez about the great work being undertaken at the University of Wollongong, Australia, as part of the SMART Infrastructure Facility. A key message from Pascal’s presentation was again the need to think about the role that social actors play in the infrastructure “complex” system. This was of particular focus when thinking about the economic benefits of infrastructure service provision. The conundrum is whether society drives economic growth or decline in the first instance, leading to increased or decreased demand for infrastructure services, or whether economic growth or decline leads to changes in societal make-up and thus acts as the stimulus or suppressant for infrastructure demand and supply. This “chicken-and-egg” question of whether the economy drives society or vice versa was of particular interest to Professor Peter Tyler (ITRC), Ed Oughton (ITRC) and Robert Carlsson (ITRC), who are interested in understanding the interactions between infrastructure and the economy, whether at a national or regional level.

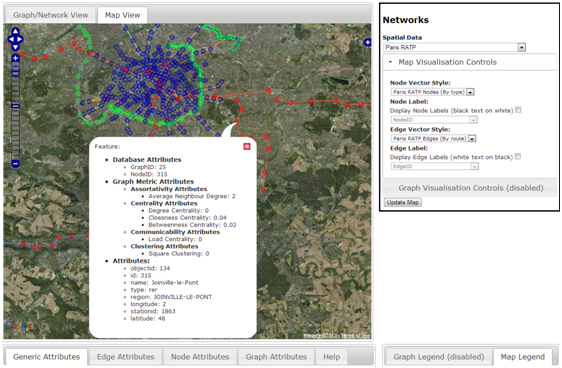

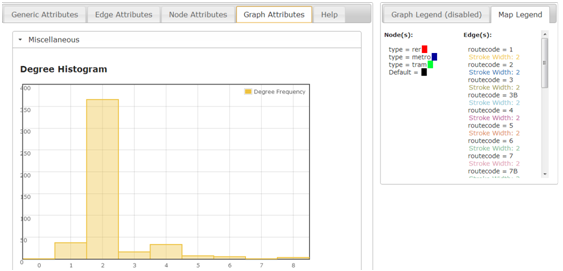

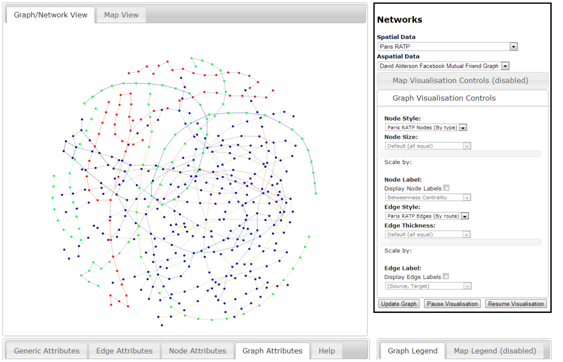

From a more technical perspective, the audience learnt about the excellent work being undertaken at SMART on the development of a regional SMART Infrastructure Dashboard, which helps infrastructure decision makers to gain access to a plethora of infrastructure-related information via a tablet- and mobile-compatible interface. Both the technical components and the design process through which this dashboard has been developed are of particular interest to work streams 1, 2 and 4. The intention is that something equivalent be prototyped and developed to give UK-based policy makers and planners, as well as scientists and researchers, the ability to access outputs from the various capacity and demand modelling activities in work stream 1, as well as the analysis of possible infrastructure failures in work stream 2.

Further presentations by Pieter Bots, Igor Mayer and Igor Nikolic focussed more on the ways in which infrastructure systems and complex systems can be modelled and evaluated. In particular Pieter, thankfully, raised the point that modelling, and the subsequent dissemination of model results, should be tailored not only to the problem or challenge to be solved but also to the audience to which the results are being presented. A “one-size-fits-all” approach to dissemination is not appropriate, as the questions that different audiences ask of complex systems may require different tools, techniques and visualisations suited to each audience. This ideal fits well with the approaches being considered within ITRC, where the tools developed will need to be adjusted to suit the needs of those using them. For example, a three-tier approach could be conceived to determine the functionality of any interface to infrastructure modelling data. The highest tier would offer access to a wider audience but with reduced functionality, and therefore less complexity in the questions that can be asked, while the lowest tier would offer more analytical capabilities but only to, for example, researchers within the relevant fields.

Dr Mayer’s presentation and discussion, by contrast, focussed on the potential application of gaming, or more correctly “serious gaming”, to help evaluate the interactions between the social and technical aspects of the complex infrastructure system. Further information on some of the evaluation work, and other projects undertaken by Dr Mayer and his team, can be found here. It was particularly interesting to hear how this approach allows individual stakeholders to be immersed in the model or environment itself, and how their interactions with, or reactions to, particular events or shocks can be evaluated as examples of how users interact with complex infrastructure systems outside the test environment. Dr Mayer raised a point heard previously when considering the use of games to evaluate socio-technical systems: the level of abstraction from reality must be chosen so that users do not become too disconnected from reality, and so that their interaction mimics interaction with the “real” system as closely as possible. This abstraction is important both at the functional level, in terms of what functions a model represents, and in the physical representation of real-world features in a computer environment, for example in selecting appropriate temporal and spatial scales across which to model a system. One drawback, highlighted during the discussion sessions over the course of the day, is that serious gaming can really only be used to evaluate interaction a handful of times, because the evaluation process relies heavily on people taking part, and this can make it difficult to repeat evaluation activities.

Dr Nikolic helped to conceptualise the problem of complex adaptive system modelling, giving a great overview of what is really happening when a modeller models something. Dr Nikolic stated that any model of a system is effectively a three-step abstraction from reality, with the level of complexity found at each of the following stages increasing from left to right:

Computer Model <- Modeller’s Conceptualisation <- Stakeholder Understanding <- Reality

This was an interesting point to raise, and it highlighted the need to include as many relevant stakeholders as possible in the model design process, to help capture as much of reality, from as many different perspectives, as possible. However, the audience agreed that stakeholder interaction and engagement, especially given the multiple actors involved in modelling complex infrastructure systems, can be one of the most challenging aspects of the modelling process.

Later, during the post-lunch session of the workshop, we heard about some great research efforts being undertaken at TU Delft to develop tools and methods that can help in the modelling of complex systems. For example, Dr Gerard Dijkema delivered a fantastic presentation, on behalf of PhD student Chris Davis and others, on the Enipedia database developed at TU Delft. The database contains information on power generation facilities worldwide, gleaned by marrying together different linked open data sources available across the web. Clearly something of this nature is interesting not only as a pure inventory of information, but also as a repository of information for energy-sector modelling purposes. The Wikipedia-style nature of the database allows online users to edit information, as well as to review different visualisations, plots, charts, maps and graphs of energy-sector information. Tools of this type are now being used within TU Delft to help in further research work, and they underline the importance of trying to use consistent data sources for these areas of modelling activity.

Overall the workshop was a fantastic opportunity to see some of the research being undertaken within the Next Generation Infrastructures group at TU Delft, and to further enhance potential collaboration opportunities between that group, UK ITRC and SMART, Australia. Many thanks to Margot Weijnen and her team for the invitation! It is likely that a similar workshop will be organised and hosted within the UK at some time in 2014, to help continue building the links between the NGI, ITRC and SMART infrastructure research teams.

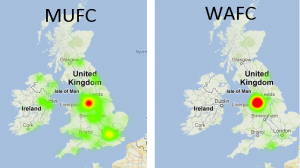

How will current socio-demographic evolution affect future transport patterns and traffic conditions?
