Inspera Assessment is the University’s system for centrally supported digital exams. Inspera can be used for automatically marked exam questions, for manually marked question types including essays, or for exams that combine both.

New functionality has recently been launched that enables colleagues to do more with digital written exams.

Question choice for students

Candidate selected questions is used to give students taking your exam a choice of which questions to answer from a list.

For example, in an exam where students need to answer 2 essay questions from a list of 6, you can set this up so that a student can choose a maximum of 2 questions to answer.

How does it work for a student?

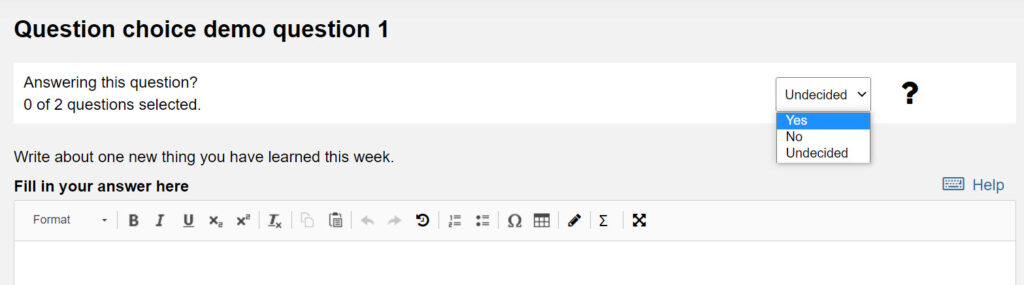

If Candidate selected questions is used in an Inspera exam, the student sees information above each question that shows how many questions to select in total, and how many they have already selected. To choose a question to answer, they change the ‘Answering this question?’ drop-down box to ‘Yes’.

If a student starts answering a question without changing the ‘Answering this question?’ drop-down box, Inspera automatically changes it to ‘Yes’.

When they have selected the maximum number of questions, the student cannot start answering any more questions. However, if they change their mind about which question(s) they want to answer, they can simply change the ‘Answering this question?’ drop-down box to ‘No’ for one question, and select a different question instead.
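For colleagues who find it easier to think in code, the selection behaviour described above can be sketched roughly as follows. This is only an illustrative model written for this article: the class and method names (`SelectionState`, `select`, `deselect`, `start_answering`) are hypothetical and are not part of Inspera or any Inspera API.

```python
from dataclasses import dataclass, field


@dataclass
class SelectionState:
    """Toy model of one student's question choices (not Inspera's own code)."""

    max_selected: int
    selected: set[str] = field(default_factory=set)

    def select(self, question_id: str) -> bool:
        """Set 'Answering this question?' to Yes; refuse if the limit is reached."""
        if question_id in self.selected:
            return True
        if len(self.selected) >= self.max_selected:
            return False
        self.selected.add(question_id)
        return True

    def deselect(self, question_id: str) -> None:
        """Set 'Answering this question?' to No, freeing up one choice."""
        self.selected.discard(question_id)

    def start_answering(self, question_id: str) -> bool:
        """Starting an answer selects the question automatically."""
        return self.select(question_id)


# A student who must answer 2 questions from a list of 6.
state = SelectionState(max_selected=2)
state.start_answering("Q1")            # auto-selected by starting to answer
state.select("Q4")                     # chosen with the drop-down box
blocked = state.start_answering("Q5")  # limit reached, so this is refused
state.deselect("Q1")                   # the student changes their mind
allowed = state.start_answering("Q5")  # a choice is free again
```

In this model, the contents of `selected` at the end of the exam correspond to the only answers a marker would see.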

How does it work for a marker?

A marker only sees answers to the questions that a student has chosen to answer.

As students can only submit answers for the maximum number of questions they are allowed to choose, this means you can say goodbye to the dilemma of trying to work out which questions to mark when a student has misread the instructions and answered too many questions!

How can I use it in my exam?

The Candidate selected questions function is available when you are authoring a question set for an Inspera digital exam. Find out more in the Inspera guide for Candidate selected questions.

Rubrics for marking

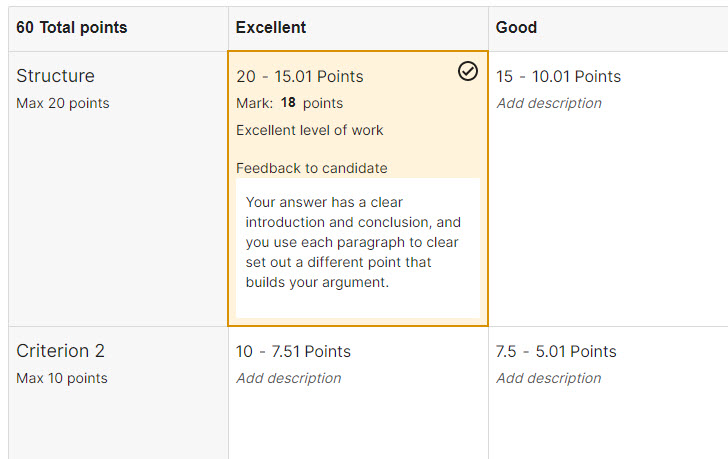

You can now create a rubric to use for marking any manually marked question type in Inspera. Rubrics allow you to build the assessment criteria for an exam question into Inspera, and use them in your marking.

Choose whether you want to use a quantitative rubric to calculate the mark for a question, or a qualitative rubric as an evaluation and feedback tool, and then manually assign the mark.

How to introduce a rubric for your exam

- When you are creating the exam question in Inspera, set up the rubric you want to use for marking that question. The Inspera guide to rubrics for question authors explains how to create a rubric and add it to your exam question.

- After the exam has taken place, use the rubric to mark the students’ answers.

- If you’ve chosen to use one of the quantitative rubric types, the student’s mark for the question will be calculated automatically as you complete the rubric. If you’ve chosen a qualitative rubric, complete it to evaluate the student’s answer, then use your evaluation to help you decide on their mark for the question.

- You can choose to add feedback for the candidate in the box below the level of performance you’ve selected for each criterion (you can see an example of this in the rubric image above).
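To picture what "automatically calculated" could mean for a quantitative rubric, here is a minimal sketch. It assumes a simple points-based rubric in which each level of performance carries a fixed number of points and the mark is the sum across criteria. The criteria, levels, point values and the `calculate_mark` function are all made up for illustration; Inspera's own rubric types may calculate marks differently.

```python
# Hypothetical rubric: criterion -> {level of performance: points}.
RUBRIC = {
    "Argument": {"Excellent": 10, "Good": 7, "Limited": 3},
    "Evidence": {"Excellent": 10, "Good": 7, "Limited": 3},
    "Structure": {"Excellent": 5, "Good": 3, "Limited": 1},
}


def calculate_mark(rubric: dict[str, dict[str, int]],
                   chosen_levels: dict[str, str]) -> int:
    """Add up the points for the level chosen on each criterion."""
    missing = set(rubric) - set(chosen_levels)
    if missing:
        # The rubric is incomplete until every criterion has a level.
        raise ValueError(f"No level chosen for: {sorted(missing)}")
    return sum(rubric[criterion][chosen_levels[criterion]]
               for criterion in rubric)


# The marker picks one level per criterion; the mark follows from the choices.
mark = calculate_mark(
    RUBRIC,
    {"Argument": "Good", "Evidence": "Excellent", "Structure": "Good"},
)
print(mark)  # 7 + 10 + 3 = 20
```

With a qualitative rubric there would be no points to add up: the chosen levels would inform the marker's judgement, and the mark would be entered by hand.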

Want to learn more about using Inspera for digital exams?

Come along to a webinar to learn about creating exam questions or marking in Inspera.

Enrol on the Inspera Guidance course in Canvas to learn about Inspera functionality at your own pace.

Visit the Inspera webpage to find out about the process for preparing an Inspera digital exam, and how the Digital Assessment Service can help.

Contact digital.exams@newcastle.ac.uk if you have questions or would like to discuss how you could use Inspera for a digital exam on your module.