At our fourth bootcamp workshop we had a masterclass from the OU team on how they build evaluation into module planning. Student feedback, a student reference group, analytic dashboards (and custom reports), and tutor reports come together to build a picture of how the module performs and to inform decisions about the following presentation.

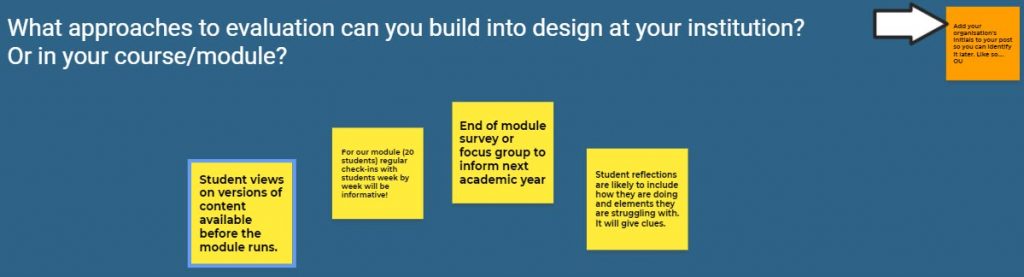

During our Jamboard exercise we thought through some of the ways we could plan evaluation into our SML module. Unlike the OU, we aren’t dealing with students at a distance; instead, there will be plenty of opportunities to actually see and hear how they are getting on.

While we don’t have a reference group, we do have several ways to gather feedback:

- reviews of content pre-run

- regular informal in-class check-ins

- end of module survey / focus group

- student reflections on the process and what they are learning

- views from student reps via Student Voice / Student Staff Committees

Thinking about this further, it’s obvious that one size doesn’t fit all. Yes, there is a need for standard questions in centrally run surveys, but you need to know what the module is about to design an effective evaluation of it. And in many respects, where our focus is on skills development, the “mentor” role of the academic leads will make feedback immediate and allow in-flight corrections.

Parameters for an NCL Bootcamp

We have also begun the process of thinking about how we gather up our learning to present an in-house variant of the Bootcamp. Some things are clear:

- it needs to reflect our focus as a predominantly campus-based university (blended is normal, online is rare)

- tools and techniques need to complement and extend our existing module approval processes

- any learning design frameworks or approaches need to be easy to pick up and easy to pass on (in the spirit of Made to Stick)

- it should be centred on a mentored or action learning approach – a supported journey

- it needs a pacemaker – a structure and metronome to enable teams to complete the design (too fast and we will drop people, too slow and people will disengage)

- it needs to be offered as a pilot, and developed with feedback

- it needs to be flexible enough to support our gloriously diverse range of disciplinary cultures

We have lots of options. In some ways this is freeing – we can take the best of our current practice, add in elements that are helpful, and document other approaches to come at the design task from a different angle.

However, if we are to develop something that could gain traction, we need input from stakeholders, and that is next on the agenda.