This two-day event, which advertised itself as being at the junction of computer science and learning science, brought together researchers involved in a wide variety of practice across the MOOC-o-sphere. The conference welcomed keynotes from Prof Sugata Mitra, Prof Mike Sharples and Prof Ken Koedinger. Presentations were generally 20 minutes long, with 10 minutes scheduled for questions. Delegates came from all over the US (MIT, Stanford, Carnegie Mellon), Holland, Korea…

The full proceedings are published here: http://tinyurl.com/las2016program and the 5 flipped sessions are here: http://tinyurl.com/las16flipped

Highlights

Unsupported Apps for Literacy

Being something of a socialist, I was impressed by the work of Tinsley Galyean and Stephanie Gottwald (Mobile Devices for Early Literacy Intervention and Research with Global Reach), who had provided apps on Android tablets to groups of children to promote literacy development. An interesting feature of their study was that they used the same approach in three radically different settings: no school, low-quality school and no preschool. Even though use of the apps was not supervised, the students’ literacy (word recognition and letter recognition) improved.

Rewarding a Growth Mindset

One of the most thought-provoking sessions explored how a game was redesigned to promote a growth mindset. Gamification is not a panacea and badges don’t motivate all students – Dweck’s work shows that if students have a fixed mindset, points can become disincentives. Brain Points: A Deeper Look at a Growth Mindset Incentive Structure for an Educational Game is well worth a read, showing how a game was redesigned to reward resilience, effort and trying new strategies. One counter-intuitive finding was that an introductory animation explaining the rationale for the scoring caused players to quit – they seemed much happier just working it out as they went along. (Perhaps a warning to us not to front-load anything with too much explanation!) I was particularly impressed with the way the researchers developed a number of different versions of the game to probe the nuances of motivation. While rewarding resilient, effort-based approaches increased motivation, awarding points randomly had no effect at all.

Remember to measure the right thing!

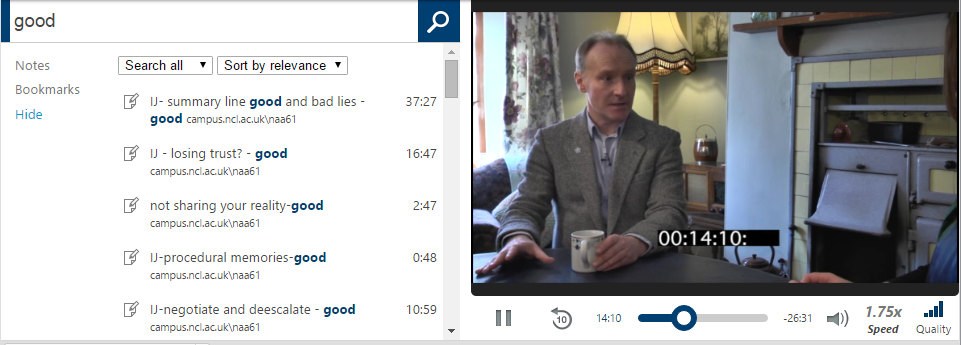

What can we learn about seek patterns where videos have in-video tests? According to Effects of In-Video Quizzes on MOOC Lecture Viewing, not much really, apart from the fact that learners tend to use the quizzes as seek points, either seeking backwards to review content or seeking forwards to go straight to the test.

Learners’ engagement with video was explored in Explaining Student Behavior at Scale: The Influence of Video Complexity on Student Dwelling Time, using an analysis of transcripts as a proxy for complexity (the language, the use of figures etc). Bizarrely, low and high complexity both increased dwelling time, leaving the authors questioning the value of inferring too much from any measures. They ended by throwing out a challenge about what we choose to measure and whether it is relevant at all: for example, “are the number of times you pick up a pencil in class meaningful?” We can measure it, but is it of consequence?

Some MOOC platforms suggest that students hold video calls over Google Hangouts using “TalkAbout”. Stankiewicz and Kulkarni have been exploring automated methods to indicate whether these are good conversations or not ($1 Conversational Turn Detector: Measuring How Video Conversations Affect Student Learning in Online Classes). TalkAbout has a helpful API that has permitted researchers to examine turn-taking in video conversations, using a change of the primary video feed as a proxy for who is talking. They find that students feel they learn more when they talk more and when they listen to a variety of speakers. The system is able to identify where calls are being dominated by one voice. The limitations were that background noise could cause the video focus to switch erroneously, facilitating behaviour could be flagged as dominance, and screen sharing would fix the video focus.
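To make the turn-taking idea concrete, here is a minimal sketch of how speaking share and dominance might be derived from focus-switch events. It does not use the TalkAbout API: the event format, the function names and the 0.6 dominance threshold are all my own assumptions for illustration.

```python
from collections import defaultdict


def speaking_shares(focus_events, call_end):
    """Estimate each participant's share of the call.

    focus_events: time-sorted list of (timestamp_seconds, speaker_id), one per
    switch of the primary video feed (hypothetical format).
    call_end: timestamp at which the call finished.
    """
    totals = defaultdict(float)
    # Pair each event with the next one; the last event runs until call_end.
    following = focus_events[1:] + [(call_end, None)]
    for (start, speaker), (next_start, _) in zip(focus_events, following):
        totals[speaker] += next_start - start
    duration = sum(totals.values())
    if not duration:
        return {}
    return {speaker: t / duration for speaker, t in totals.items()}


def count_turns(focus_events):
    """Count a turn each time the focus moves to a different speaker."""
    turns = 0
    previous = None
    for _, speaker in focus_events:
        if speaker != previous:
            turns += 1
            previous = speaker
    return turns


def dominant_speaker(shares, threshold=0.6):
    """Return the speaker holding more than `threshold` of the call, if any.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    if not shares:
        return None
    speaker, share = max(shares.items(), key=lambda item: item[1])
    return speaker if share > threshold else None


# Made-up example call lasting 120 seconds.
events = [(0, "ana"), (30, "ben"), (40, "ana"), (100, "cai"), (110, "ana")]
shares = speaking_shares(events, call_end=120)
print(count_turns(events), shares, dominant_speaker(shares))
```

A sketch like this inherits the limitations noted above: a focus switch caused by background noise counts as a turn, and a facilitator who speaks often would be flagged as dominant.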

Cheating

Some MOOC learners use multiple accounts to harvest quiz answers.

Using Multiple Accounts for Harvesting Solutions in MOOCs described the authors’ analysis for identifying this behaviour and their attempts at minimising it. They distinguished the “harvesting account”, used to find the answers, from the “master account”, used to submit the right answers and earn the certificate. They identified paired accounts from log data by looking for cases where, from the same IP address, the master account submitted the right answer within 30 minutes of the harvesting account finding it out. In reality right answers were often resubmitted within seconds. A number of solutions were suggested to get around this:

- Don’t give feedback on summative MCQs

- Delay feedback

- Incorporate randomness or variables into the questions (NUMBAS is a good example)
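The pairing heuristic described above (same IP address, correct answer submitted within 30 minutes of the other account seeing the solution) can be sketched roughly as follows. The log format, field names and function name are hypothetical; the authors’ actual analysis will have been more careful than this.

```python
from datetime import datetime, timedelta


def find_suspect_pairs(events, window=timedelta(minutes=30)):
    """Return a set of (harvester, master) account pairs.

    events: list of dicts with hypothetical keys
      account, ip, question, time (datetime) and kind, where kind is
      "reveal" (the account was shown the correct answer) or
      "correct" (the account submitted a correct answer).
    """
    reveals = [e for e in events if e["kind"] == "reveal"]
    corrects = [e for e in events if e["kind"] == "correct"]
    pairs = set()
    for r in reveals:
        for c in corrects:
            delay = c["time"] - r["time"]
            if (
                c["account"] != r["account"]
                and c["ip"] == r["ip"]
                and c["question"] == r["question"]
                and timedelta(0) <= delay <= window
            ):
                pairs.add((r["account"], c["account"]))
    return pairs


# Made-up log entries for illustration only.
t0 = datetime(2016, 4, 25, 10, 0, 0)
log = [
    {"account": "h1", "ip": "10.0.0.1", "question": "q1", "time": t0, "kind": "reveal"},
    # Same IP, 12 seconds later: flagged.
    {"account": "m1", "ip": "10.0.0.1", "question": "q1",
     "time": t0 + timedelta(seconds=12), "kind": "correct"},
    # Different IP: not flagged.
    {"account": "x1", "ip": "10.0.0.2", "question": "q1",
     "time": t0 + timedelta(seconds=20), "kind": "correct"},
    # Same IP but outside the 30 minute window: not flagged.
    {"account": "m2", "ip": "10.0.0.1", "question": "q1",
     "time": t0 + timedelta(minutes=45), "kind": "correct"},
]
suspects = find_suspect_pairs(log)
print(suspects)
```

A shared IP address alone would also catch housemates or students on a campus network, so a flag from a sketch like this is a prompt for a closer look rather than proof of cheating.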

In How Mastery Learning Works at Scale, Ritter and colleagues explored whether teachers followed the rules when working with Carnegie Learning’s Cognitive Tutor, a system that presents students with maths material. The concept behind it is that students master key topics before moving on to new “islands” of knowledge. Doing so will ultimately result in a class being distributed across a variety of topics. The reality is that teachers can be naughty and violate the rules: they unlock islands so that students study the same material at once. This means that lower-performing students do not benefit from the program. Less learning happens because they are forced to move on before they have mastered the topics.

In MOOC conversations do learners join groups that are politically siloed?

According to The Civic Mission of MOOCs: Measuring Engagement across Political Differences in Forums, apparently not. There’s evidence of relatively civil behaviour, with upvoting extending to those holding contrary viewpoints. (This would echo our experience of FutureLearn courses being generally civil and respectful.)

Automated Grading

A few of the presenters described work on automated approaches to grading text-based work. Adaptive learning approaches where the focus was on “ranking” rather than grading appeared to be more robust, particularly if the machine learning process could work with gold standard responses and then choose additional pieces of work to be TA graded where its predictions were uncertain.

Harnessing Peer Assessment

Generating a grade from peer-marked assignments based on mean scores was examined via a Luxembourg study in which student peer grades were compared with TA grading (Peer Grading in a Course on Algorithms and Data Structures: Machine Learning Algorithms do not Improve over Simple Baselines). Surprisingly, no method was found to be more effective than applying the mean of the peer grades. There was some student bias (higher marks than TAs) which could be accounted for, but the element that could not easily be overcome was the variability resulting from students’ lack of knowledge. Where TAs gave some assignments low marks because of errors, students did not recognise these errors and gave a higher score. This led to an interesting discussion on the circumstances in which peer grading is valid.
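As a rough illustration of the simple baseline, the sketch below takes the mean of the peer grades and subtracts an estimate of how generously students mark compared with TAs. The paper does not say (as far as I noted) how the bias was accounted for, so treating it as a constant offset is my assumption, and the grades are made up.

```python
from statistics import mean


def peer_mean(peer_grades):
    """Baseline grade: the plain mean of the peer grades."""
    return mean(peer_grades)


def estimate_bias(calibration):
    """Average amount by which the peer mean exceeds the TA grade.

    calibration: list of (peer_grades, ta_grade) pairs for assignments
    that were marked by both peers and a TA (hypothetical data).
    """
    return mean(peer_mean(peers) - ta for peers, ta in calibration)


def corrected_grade(peer_grades, bias):
    """Peer mean with the estimated constant bias removed."""
    return peer_mean(peer_grades) - bias


# Made-up calibration set: peers mark one point higher than the TA on average.
calibration = [([8, 9, 7], 7), ([6, 7, 8], 6)]
bias = estimate_bias(calibration)
grade = corrected_grade([9, 8, 10], bias)
print(bias, grade)
```

A correction like this only shifts every grade by the same amount. It does nothing for the harder problem described above, where students miss errors that a TA would spot.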

Flipping in a conference?

On day two the afternoon was given over to flipped sessions, even though most of the jet-lagged audience had failed to engage with the materials. Of those who did, it seemed that most were only really prepared to spend 30 minutes working through the content.

Peer assessment also featured in two of the flipped sessions. In one (Improving the Peer Assessment Experience on MOOC Platforms) we looked at improvements to the workflow, including the ability to rate the usefulness of reviews. In the other (Graders as Meta-Reviewers: Simultaneously Scaling and Improving Expert Evaluation for Large Online Classrooms) the authors presented peer assessments to TAs to enable them, in effect, to do meta-reviews. Importantly, this resulted in better (more comprehensive) feedback. They also found it was better to present comments, not grades, to reviewers in order to reduce bias.

Although positively received by the audience, not all the flipped presentations worked well. And as one who had tried my best over breakfast to do the preparation, I wasn’t too convinced. Having said that, we did find ourselves the subject of a live experiment where we reviewed reviews of conference papers the previous day. One of the flipped presenters spoke of how preparing the materials in a flipped manner enabled him to make them available in an accessible form – after all, not everyone wants to read an academic paper.

Keynotes

Prof Sugata Mitra presented the development of his ideas from hole in the wall through to school in the cloud. The data-driven audience admired his zeal but asked questions about data and evidence, and visibly flinched at thoughts of times-tables being a thing of the past. “Can you really apply trigonometry by asking big questions?” one of them asked me between presentations.

A point of balance came from Mike Sharples (Effective Pedagogy at Scale: Social Learning and Citizen Inquiry), who spoke about his exploration of pedagogy at scale, the history of “Innovating Pedagogy” and the rationale behind FutureLearn’s design as a social learning platform. He was keen to point out that not all approaches work for all domains. The challenge that Mike presented was how we make learner objectives explicit so that meaningful inferences can be made. A really great set of slides, worth a look.

Ken Koedinger’s session on Practical Learning Research at Scale at the end of the conference rounded the two days off well. You cannot see learning, he posited, so beware illusions:

- students watching lectures

- instructors watching students

- students reporting their learning

- liking is not learning: there is a low correlation between students’ course ratings and their after-training skills

- observations of engagement or confusion are not strongly predictive

Summary

A fascinating two days in which we did battle with the central problems of how to measure learning; how dialogue can be hard when there are fixed views on what learning is (fundamentally cognitive or fundamentally social); and the ongoing difficulties of providing meaningful and robust feedback at scale.