I gave a keynote talk on Real-Power Computing at DESSERT 2018 in Kiev in May 2018.

The slides in PDF are here:

https://www.staff.ncl.ac.uk/alex.yakovlev/home.formal/talks/Yakovlev-DESSERT2018-Kyiv.pdf

I recently discovered that there is no accurate linguistic translation of the words “Свой” and “Чужой” from Russian to English. A purely semantic translation of “Свой” as “Friend” and “Чужой” as “Foe” will only be correct in this particular paired context of “Свой – Чужой” as “Friend – Foe”, which sometimes delivers the same idea as “Us – Them”. I am sure there are many idioms that are also translated as the “whole dish” rather than by ingredients.

Anyway, I am not going to discuss here linguistic deficiencies of languages.

I’d rather talk about the concept or paradigm of “Свой – Чужой”, or equally “Friend – Foe”, that we can observe in Nature as a way of enabling living organisms to survive as species through many generations. WHY, for example, does one particular species not produce offspring as a result of mating with another species? I am sure geneticists would have some “unquestionable” answers to this question. But those answers will probably be either so trivial that they wouldn’t trigger any further interesting technological ideas, or so involved that they’d require studying the subject at length before seeing any connections with non-genetic engineering. Can we hypothesize about this “Big WHY” by looking at the analogies in technology?

Of course, another question crops up: why is that particular WHY interesting, and maybe of some use, to us engineers?

Well, one particular form of usefulness can be in trying to imitate this “Friend – Foe” paradigm in information processing systems to make them more secure. Basically, what we want to achieve is that if a particular activity has a certain “unique stamp of a kind”, it can only interact safely and produce meaningful results with another activity of the same kind. As activities or their products lead to other activities, we can think of some form of inheritance of the kind, as well as evolution in the form of creating a new kind with another “unique stamp of that kind”.

Look at this process as the physical process driven by energy. Energy enables the production of the offspring actions/data from the actions/data of the similar kind (Friends leading to Friends) or of the new kind, which is again protected from intrusion by the actions/data of others or Foes.

My conjecture is that the DNA mechanisms in Nature underpin this “Friend – Foe” paradigm by applying unique identifiers or DNA keys. In the world of information systems we generate keys (by prime generators and filters to separate them from the already used primes) and use encryption mechanisms. I guess that the future of electronic trading, if we want it to be survivable, is in making available energy flows generate masses of such unique keys and stamp our actions/data in their propagation.
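To make the “unique stamp of a kind” idea a bit more tangible, here is a minimal sketch in Python. It is only an illustration under my own assumptions: I use a keyed hash (HMAC from the standard library) as a stand-in for the stamp, and all function names (`new_kind_key`, `derive_child_key`, `stamp`, `is_friend`) are hypothetical. It says nothing about how DNA or any real trading system actually works.

```python
import hashlib
import hmac
import secrets


def new_kind_key() -> bytes:
    """Create a fresh random key that identifies a new 'kind' (hypothetical helper)."""
    return secrets.token_bytes(32)


def derive_child_key(parent_key: bytes, label: bytes) -> bytes:
    """Evolve a new kind from a parent kind: the child key depends on the parent key."""
    return hmac.new(parent_key, b"child:" + label, hashlib.sha256).digest()


def stamp(kind_key: bytes, data: bytes) -> bytes:
    """Produce the 'unique stamp of a kind' for a piece of data."""
    return hmac.new(kind_key, data, hashlib.sha256).digest()


def is_friend(kind_key: bytes, data: bytes, tag: bytes) -> bool:
    """Accept data only if its stamp was made with the same kind key."""
    return hmac.compare_digest(stamp(kind_key, data), tag)


friend_key = new_kind_key()
foe_key = new_kind_key()
message = b"offspring data"
tag = stamp(friend_key, message)

print(is_friend(friend_key, message, tag))  # checked by an activity of the same kind
print(is_friend(foe_key, message, tag))     # checked by an activity of another kind
```

In this sketch a Friend accepts the stamped data and a Foe rejects it, while `derive_child_key` hints at how a new kind could inherit from an old one and still be distinct from it.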

Blockchains are probably already using this “Свой – Чужой” paradigm, aren’t they? I am curious how mother Nature manages to generate these new DNA keys and not run out of energy. Probably there is a hidden reuse there? There should be a balance between complexity and productivity somewhere.

What is Real-Power Computing?

RP Computing is a discipline of designing computer systems, in hardware and software, which operate under definite power or energy constraints. These constraints are formed from the requirements of applications, i.e. known at the time of designing or programming these systems, or obtained from the real operating conditions, i.e. at run time. These constraints can be associated with limited sources of energy supplied to the computer systems as well as with bounds on dissipation of energy by computer systems.

Applications

These define areas of computing where power and energy require rationing in making systems perform their functions.

Different ways of categorising applications can be used. One possible way is to classify applications based on different power ranges, such as microWatts, milliWatts etc.

Another way would be to consider application domains, such as bio-medical, internet of things, automotive systems etc.

Paradigms

These define typical scenarios where power and energy constraints are considered and put into interplay with functionalities. These scenarios define modes, i.e. sets of constraints and optimisation criteria. Here we look at the main paradigms of using power and energy on the roads.

Power-driven: Starting on a bicycle or in a car from a stationary state as we go from low gears to high gears. Low gears allow the system to reach a certain speed with minimum power.

Energy-driven: Steady driving on a motorway, where we could maximise our distance for a given amount of fuel.

Time-driven: Steady driving on a motorway where we minimise the time to reach the destination and fit the speed-limit regulations.

Hybrid: Combinations of power-driven and energy-driven scenarios, e.g. as in PI(D) control.
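The difference between the energy-driven and time-driven modes can be sketched with a toy calculation. Everything in it is an assumption made purely for illustration: the fuel model (an idle term that falls with speed plus a drag term that grows with speed), its coefficients, the candidate speeds and the speed limit are all invented, and the units are arbitrary.

```python
def fuel_per_km(speed_kmh, idle=400.0, drag=0.0005):
    """Assumed toy model: idle consumption spread over distance plus a drag term."""
    return idle / speed_kmh + drag * speed_kmh ** 2


speeds = range(30, 131, 10)   # candidate cruising speeds in km/h (assumed)
speed_limit = 110             # regulatory constraint in km/h (assumed)

# Energy-driven mode: maximise distance per unit of fuel, i.e. minimise fuel per km.
energy_driven = min(speeds, key=fuel_per_km)

# Time-driven mode: minimise travel time, i.e. go as fast as the limit allows.
time_driven = max(s for s in speeds if s <= speed_limit)

print(energy_driven, time_driven)
```

The same road and the same car give two different operating points, simply because the optimisation criterion and the constraint set have changed. That is what I mean by a mode.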

Similar categories could be defined for budgeting cash in families, depending on the salary payment regimes and living needs. Another source of examples could be the funding modes for companies at different stages of their development.

Architectural considerations

These define elements, parameters and characteristics of system design that help meet the constraints and optimisation targets associated with the paradigms. Some of them can be defined at design (programming and compile) time, while others are defined at run time and would require monitors and controls.

First of all, I would like you to read my previous post on the graphical interpretation of the mechanisms of evolution of X and Y chromosomes.

These mechanisms clearly demonstrate the greater changeability of the X pool (in females) than the Y pool (present only in males) – simply due to the fact that X chromosomes in females merge and branch (called fan in and fan out).

The next, in my opinion, interesting observation is drawn from the notions of mathematical analysis and dynamical systems theory. Here we have ideas of proportionality, integration, differentiation, on one hand, and notions of combinationality and sequentiality on the other.

If we look at the way X-chromosomes evolve with fan-in mergers, we clearly see features akin to proportionality and differentiality. The outgoing X pools are sensitive to the incoming X pools and their combinations. Any mixing node in this graph shows high sensitivity to inputs.

Contrary to that, the way of evolution of Y-chromosomes with NO fan-in contributions, clearly shows the elements of integration and sequentiality, or inertia, i.e. the preservation of the long term features.

So, the conclusions that can be drawn from this analysis are:

Again, I would be grateful for any comments and observations!

PS. Looking at the way our society is now governed (cf. female or male presidents and prime ministers), you might wonder whether we are subject to differentiality/combinatorics or integrality/sequentiality, and hence whether we are stable as a dynamical system or systems (in different countries).

Happy Days!

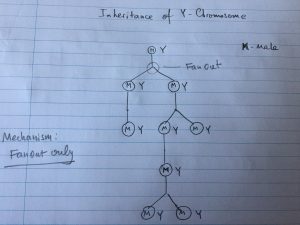

It is a known fact that men inherit both Y and X chromosomes while women only X chromosomes.

As a corollary of that fact we also know that Y-chromosomes, sometimes synonymized with Y-DNA, are only inherited by the male part of the human race. This means that the Y-chromosome inheritance mechanism is only forward-branching, i.e. Y-DNA is passed from one generation to the next generation “nearly” unchanged. As I am not an expert in genetics I cannot state precisely, in quantitative terms, what this “nearly” is worth. Suppose this “nearly” is close to 100% for simplicity.

Below is a diagram which illustrates my understanding of the mechanism of inheritance of Y-chromosomes.

This diagram is basically a branching tree, showing the pathways of the Y-DNA from one generation to the future generations of males. The characteristic feature of this inheritance mechanism is that it is Fan-out only. Namely, there is no way that the Y-DNA can be obtained by merging different Y-DNAs because we have no Fan-in mechanism.

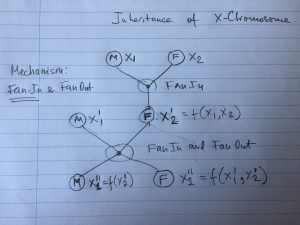

Let’s now consider the mechanism of inheritance and evolution of X-chromosomes. The way I see this mechanism is shown in the following diagram.

X-chromosomes are inherited by both males and females. But, as I understand, this happens in two different ways.

Each female takes a portion of X chromosomes from her father (let’s denote it as X1) and a portion of X chromosomes from her mother (denoted by X2), thereby producing her own set of chromosomes X2’ which is a function of X1 and X2. Similar inheritance takes place in the next generation, where X2’’=f(X1’,X2’).

Each male, however, only inherits X chromosomes from his mother, as shown above, where X1’’=f(X2’).

At each generation, when the offspring produced has a female, there is a merge of X chromosomes from both parents. This means that the pool of X chromosomes as we go down the generations is constantly changed and renewed with new DNA from different incoming branches.

This mechanism is therefore both Fan-in and Fan-out. And this is not a tree but a directed acyclic graph.
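The contrast between the Fan-out-only tree and the Fan-in/Fan-out graph can be checked on a tiny made-up family. The sketch below is my own illustration, not a genetic model: the `Person` class, the helper names and the family itself are hypothetical, and it only follows who can contribute Y or X material to whom, exactly as described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Person:
    name: str
    sex: str  # "M" or "F"
    father: Optional["Person"] = None
    mother: Optional["Person"] = None


def y_parents(p):
    """Y-DNA edge: a male receives Y only from his father (no fan-in)."""
    return [p.father] if p.sex == "M" and p.father else []


def x_parents(p):
    """X-DNA edges: a female receives X from both parents, a male only from his mother."""
    if p.sex == "F":
        return [q for q in (p.father, p.mother) if q]
    return [p.mother] if p.mother else []


def founders(p, parents_of):
    """Follow inheritance edges back to the earliest known contributors."""
    ups = parents_of(p)
    if not ups:
        return {p.name}
    result = set()
    for q in ups:
        result |= founders(q, parents_of)
    return result


# A hypothetical three-generation family.
gf1, gm1 = Person("gf1", "M"), Person("gm1", "F")
gf2, gm2 = Person("gf2", "M"), Person("gm2", "F")
father = Person("father", "M", gf1, gm1)
mother = Person("mother", "F", gf2, gm2)
son = Person("son", "M", father, mother)
daughter = Person("daughter", "F", father, mother)

print(sorted(founders(son, y_parents)))       # ['gf1']
print(sorted(founders(son, x_parents)))       # ['gf2', 'gm2']
print(sorted(founders(daughter, x_parents)))  # ['gf2', 'gm1', 'gm2']
```

The son's Y traces back along a single path, while the daughter's X pool already has three contributors after only two generations.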

What sort of conclusion can we draw from this analysis? Well, I draw many interesting (to me at least) conclusions associated with the dynamics of evolution of the genetic pool of males and females. One can clearly see that the dynamics of genesis of females is much higher than that of males. Basically, one half of a male’s genesis remains “nearly” (please note my earlier remark about “nearly”) unchanged, and only the other half is subject to mutation, whereas in females both halves are changed.

I can only guess that Y-chromosomes are probably affected by various factors such as geographical movements, difference in environment, diseases etc., but these mutations are nowhere near as powerful as the mergers in the X-pool.

In my next memo I will write about the relationship between the above mechanisms of evolution and PID (proportional-integral-derivative) control in dynamical systems, which will lead to some conjectures about the feedback control mechanisms in evolution of species.

I would be grateful if those whose knowledge of human genetics is credible enough could report to me any errors in my interpretation of these mechanisms.

Last week there was an inaugural ARM Research Summit.

https://developer.arm.com/research/summit

I gave a talk on Power & Compute Codesign for “Little Digital” Electronics.

Here are the slides of this talk:

https://www.staff.ncl.ac.uk/alex.yakovlev/home.formal/Power-and-Compute-Talk

Here is the abstract of my talk:

Power and Compute Codesign for “Little Digital” Electronics

Alex Yakovlev, Newcastle University

The discipline of electronics and computing system design has traditionally separated power management (regulation, delivery, distribution) from data-processing (computation, storage, communication, user interface). Power control has always been a prerogative of power engineers who designed power supplies for loads that were typically defined in a relatively crude way.

In this talk, we take a different stance and address upcoming electronics systems (e.g. Internet of Things nodes) more holistically. Such systems are miniaturised to the level that both power management and data-processing are virtually inseparable in terms of their functionality and resources, and the latter are getting scarce. Increasingly, both elements share the same die, and the control of power supply, or what we call here a “little digital” organ, also shares the same silicon fabric as the power supply. At present, there are no systematic methods or tools for designing “little digital” that could ensure that it performs its duties correctly and efficiently. The talk will explore the main issues involved in formulating the problem of and automating the design of little digital circuits, such as models of control circuits and the controlled plants, definition and description of control laws and optimisation criteria, characterisation of correctness and efficiency, and applications such as biomedical implants, IoT ‘things’ and WSN nodes.

Our particular focus in this talk will be on power-data convergence and ways of designing energy-modulated systems [1]. In such systems, the incoming flow of energy will largely determine the levels of switching activity, including data processing – this is fundamentally different from the conventional forms where the energy aspect simply acts as a cost function for optimal design or run-time performance.

We will soon be asking ourselves questions like these: For a given silicon area and given data processing functions, what is the best way to allocate silicon to power and computational elements? More specifically, for a given energy supply rate and given computation demands, which of the following system designs would be better? One that involves a capacitor network for storing energy, and investing energy into charging and discharging flying capacitors through computational electronics which would be able to sustain high fluctuations of the Vcc (e.g. built using self-timed circuits). The other one that involves a switched capacitor converter to supply power as a reasonably stable Vcc (could be a set of levels). In this latter case, it would also be necessary to invest some energy into powering control for the voltage regulator. In order to decide between these two organisations, one would need to carefully model both designs and characterise them in terms of energy utilisation and delivery of performance for the given computation demands. At present, there are no good ways for co-optimising power and computational electronics.

Research in this direction is in its infancy and this is only a tip of the iceberg. This talk will shed some light on how we are approaching the problem of power-data co-design at Newcastle, in a series of research projects producing novel types of sensors, ADCs, asynchronous controllers for power regulation, and software tools for designing “little digital” electronics.

[1] A. Yakovlev. Energy modulated computing. Proceedings of DATE, 2011, Grenoble, doi: 10.1109/DATE.2011.5763216
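As a rough feel for what “energy-modulated” means in the abstract above, here is a toy simulation. It is a sketch under my own simplifying assumptions, not a model from the talk or from [1]: energy arrives in discrete steps, is held in a storage element of limited capacity, and every operation costs the same fixed amount. The function name and all numbers are hypothetical.

```python
def energy_modulated_activity(inflow, op_cost=1.0, capacity=5.0):
    """Return the number of operations executed in each time step.

    inflow   -- energy arriving per step (arbitrary units, assumed values)
    op_cost  -- energy consumed by one operation (assumed constant)
    capacity -- maximum energy the storage element can hold (assumed)
    """
    stored = 0.0
    activity = []
    for e in inflow:
        stored = min(capacity, stored + e)  # harvest, clipping at capacity
        ops = int(stored // op_cost)        # do as much work as the energy allows
        stored -= ops * op_cost             # pay for the work done
        activity.append(ops)
    return activity


print(energy_modulated_activity([0.5, 0.5, 3.0, 0.0, 2.5, 0.2]))
# [0, 1, 3, 0, 2, 0]
```

The switching activity simply follows the energy inflow. Nothing in the loop treats energy as a cost to be minimised; energy is the thing that triggers the work.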

I took part in a Panel on Bio-inspired Electronic Design Principles at the

Here are my slides

The quick summary of these ideas is here:

Summary of ideas for discussion from Alex Yakovlev, Newcastle University

With my 30 years of experience in designing and automating the design of self-timed (aka asynchronous) systems, I have been involved in studying and exploiting in practice the following characteristics of electronic systems: inherent concurrency, event-driven and causality-based processing, parametric variation resilience, closed-loop timing error avoidance and correction, energy-proportionality, digital and mixed-signal interfaces. More recently, I have been looking at new bio-inspired paradigms such as energy-modulated and power-adaptive computing, significance-driven approximate computing, real-power (to match real-time!) computing, computing with survival instincts, computing with central and peripheral powering and timing, power layering in systems architecting, exploiting burstiness and regularity of processing etc.

In most of these the central role belongs to the notion of energy flow as a key driving force in the new generation of microelectronics. I will therefore be approaching most of the Questions raised for the Panel from the energy flow perspective. The other strong aspect I want to address that acts as a drive for innovation in electronics is a combination of technological and economic factors, which is closely related to survival, both in the sense of longevity of a particular system as well as survival of design patterns and IPs as a longevity of the system as a kind or as a system design process.

My main tenets in this discussion are:

I will also pose, as one of the biggest challenges for semiconductor systems, the challenge of massive informational connectivity of parts at all levels of hierarchy. This is something that I hypothesize can only be addressed in hybrid cell-microelectronic systems. Information (and hence data processing) flows should be commensurate with energy flows; only then will we be close to thermodynamic limits.

Alex Yakovlev

11.08.2016

A nice and relevant message on Seth’s blog:

http://sethgodin.typepad.com/seths_blog/2015/06/control-or-resilience.html

Not forgetting to invest in resilience is what I keep reminding people of in my tweets.

I gave a keynote talk on “Putting Computing on a Strict Diet with Energy-Proportionality” at the XXIX Conference on Design of Circuits and Integrated Systems, held in Madrid on 26-28th November 2014.

The abstract of the talk can be found in the conference programme:

http://www.cei.upm.es/dcis/wp-content/uploads/2014/10/DCIS_2014_program.pdf

The slides of the talk can be found here:

http://async.org.uk/Alex.Yakovlev/Yakovlev-DCIS2014-Keynote-final.pdf

One possible strategy for differentiating some types of electronics from others is to stage a “power-modulated competition” between them, by gradually tuning the power source in different ways, for example in terms of power levels, through voltage level and/or current level, in a dynamic sense as well. The circuits that require a stable and sufficiently high level of voltage will be gradually eliminated from the race … Only those that can survive across the dynamic range of power will pass through the natural selection!

Building such a test bed is an interesting challenge by itself!
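Before building any hardware, the idea of such a competition can be played out in a few lines of Python. This is only a sketch with invented data: the circuit names, their minimum operating voltages and the supply profile are all hypothetical, and a real circuit would of course not be characterised by a single threshold.

```python
def power_modulated_competition(circuits, voltage_profile):
    """Return names of circuits that stay operational over the whole profile.

    circuits        -- mapping of name -> minimum operating voltage in volts (assumed)
    voltage_profile -- sequence of supply voltages applied over time (assumed)
    """
    survivors = set(circuits)
    for v in voltage_profile:
        # A circuit drops out of the race as soon as the supply falls below its minimum.
        survivors = {name for name in survivors if v >= circuits[name]}
    return sorted(survivors)


circuits = {"clocked_logic": 0.9, "self_timed_logic": 0.3, "analog_block": 1.1}
profile = [1.2, 1.0, 0.8, 0.5, 0.4, 0.9]

print(power_modulated_competition(circuits, profile))
# ['self_timed_logic']
```

In this made-up race only the circuit that tolerates the lowest supply survives the whole profile, which is the natural selection effect described above.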