People say smart contracts are the next big thing in the blockchain space. In the simplest terms, a smart contract is a program stored and executed on the blockchain. The attractive things about a smart contract are that its execution is (or will be) always correct (if you believe in the consensus protocol that maintains the blockchain), it is self-enforcing (executed and enforced by peers), it is trustless (no central authority) and it is cheap to use. It sounds so good, but what can smart contracts do? Of course, we want something more than ICOs. And this is what I will write about.

A Short Summary in case you are impatient: we use smart contracts to implement mechanisms designed using game theory, to enable cost-effective verifiable cloud computing. The paper (co-authored with Yilei Wang, Amjad Aldweesh, Patrick McCorry, Aad van Moorsel) was presented early this month at CCS 2017, and here are the full paper and slides.

The Need for Verifiable Cloud Computing comes from distrust. Everyone using cloud computing probably knows that “the cloud” is just a bunch of computers that belong to someone else. So when I outsource something to the cloud, how can I be sure it is done properly? In current practice, I cannot. You can imagine how annoying this is when the outsourced computation is important to me. The clouds are not necessarily malicious; it is simply a consequence of uncertainty: I do not know exactly what happens in the clouds, and I have no control over it either. So the best I can do, as a matter of due diligence, is not to trust the clouds and to verify all results they return. But how? Verification can be as expensive as recomputing the task, and I might not have the resources to do that (if I did, I could avoid using the cloud in the first place by computing it myself).

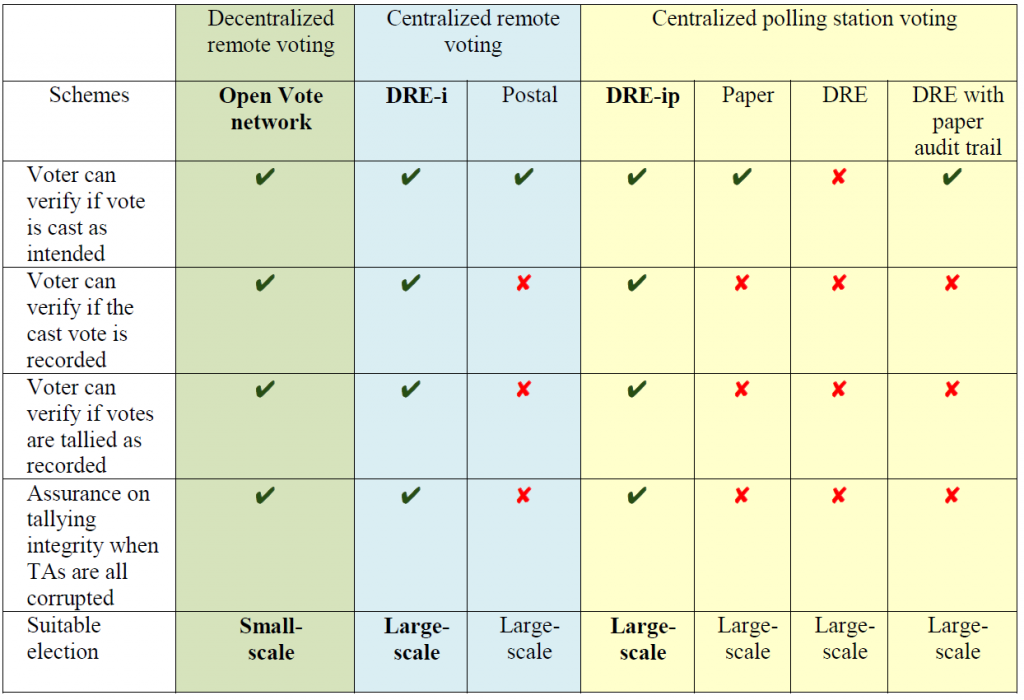

The Current State of verifiable computing is more or less divided into two streams. Some verify by using cryptography, some verify by using replication. In the cryptography based approach, the cloud must generate a proof that the computation was done correctly. Cryptography ensures that, unless our beloved cryptographic assumptions are wrong, the cloud cannot generate a valid proof if the computation is wrong. By checking the proof, I can be assured of the correctness of the computation. In the replication based approach, I give the same task to several clouds, later collect the results from them, and cross-check the results. If the results from all replicas match, I can assert with high confidence that the computation was done correctly. Of course, the more replicas I use, the more reliable my assertion is. More replicas can also help me find the correct result, should something go wrong in some of the replicas.
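To make the replication idea concrete, here is a minimal sketch of the cross-check step. It is not the protocol from the paper; the function name `cross_check` and the majority rule used to recover a result are my own assumptions for illustration, and it assumes honest replicas return identical, directly comparable results.

```python
from collections import Counter

def cross_check(results):
    """Compare the results returned by several replicas (hypothetical helper).

    Returns (all_match, majority_result):
      - all_match is True only if every replica returned the same value;
      - majority_result is the value returned by more than half of the
        replicas, or None if no value has a strict majority.
    """
    if not results:
        raise ValueError("need at least one replica result")
    counts = Counter(results)
    value, votes = counts.most_common(1)[0]
    all_match = votes == len(results)
    has_majority = votes > len(results) / 2
    return all_match, (value if has_majority else None)

# Three replicas, one of which returned something different.
all_match, recovered = cross_check([42, 41, 42])
```

With three replicas a single faulty result can be outvoted, but with only two replicas a mismatch tells me that something is wrong without telling me which result to keep.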

What is Missing in all existing verifiable computing techniques is a sense of economy. Surely they are technically sound, but they come at an unaffordable price. The problem is that the cloud is not free. You pay for what you compute. Generating a cryptographic proof is much more expensive than you would think. Currently, the overhead is 3 – 6 orders of magnitude more than the computation being verified. Simple primary school math, taking the optimistic end of that range:

- The cost of my computation: £500 per month

- The cost of getting the proofs: £500 * 1000 = £500,000 (half a million) per month

- What I get: bankruptcy and out of business

For the replication based approach, since I have to pay each of the replicas, the cost is multiplied by the number of replicas. Of course, it soon becomes unaffordable as that number grows.
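The arithmetic for both approaches can be written down in a few lines. This is only a back-of-the-envelope sketch: the £500 figure and the 3 to 6 orders of magnitude come from the text above, while the function names and the flat per-replica pricing are assumptions made for illustration.

```python
def proof_cost(base_cost, overhead_orders):
    """Monthly cost of proof generation, assuming the overhead is
    10**overhead_orders times the cost of the computation itself."""
    return base_cost * 10 ** overhead_orders

def replication_cost(base_cost, replicas):
    """Monthly cost when every replica is paid the same base price
    (an assumption: real providers may price differently)."""
    return base_cost * replicas

base = 500  # pounds per month, as in the example above

crypto_low = proof_cost(base, 3)       # optimistic end: 3 orders of magnitude
crypto_high = proof_cost(base, 6)      # pessimistic end: 6 orders of magnitude
two_clouds = replication_cost(base, 2)
ten_clouds = replication_cost(base, 10)
```

Even at the optimistic end, the proof costs half a million pounds per month, whereas replication grows only linearly with the number of replicas I pay.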

One reason, perhaps the most important, that people want to use cloud computing is cost saving. When there is no cost advantage over on-premises IT infrastructure, which you control and whose correctness you don’t need to worry much about, many would not be that keen on the cloud.

The Question then is: can we have cost-effective verifiable cloud computing after all? Well, for the cryptography based approach, I am a bit pessimistic. The gap is just too big. Unless there is a big breakthrough, we won’t be able to use it in practice in the near future. For the replication based approach, there might be some hope, if the number of replicas we pay for is small. How small can the number be? The least is 2. In fact, that might work. The idea is that using cloud computing is cheaper than using your own trusted on-premises IT infrastructure. “Two cloud replicas” means doubling the cloud cost, and cost-wise this may not differ much from, or may even be lower than, using your trusted IT infrastructure. Given the other good qualities cloud computing possesses, people would have the motivation to use the cloud.

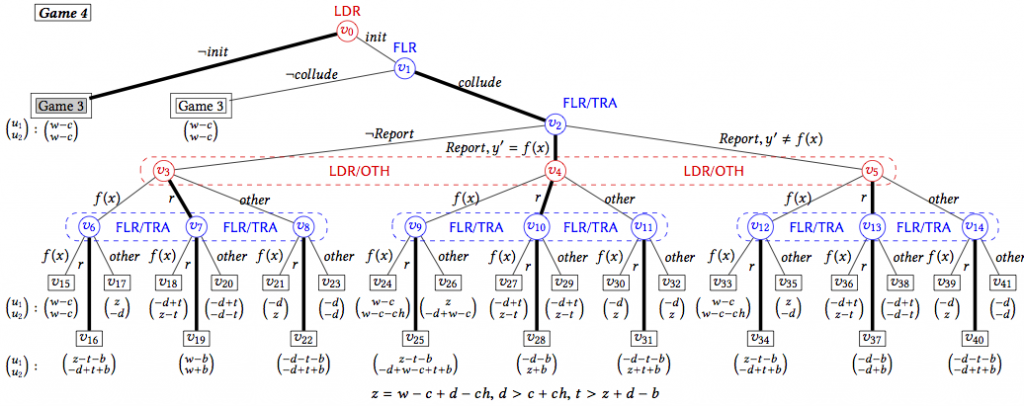

This is straightforward, so why has no one come up with something? Let us forget all engineering difficulties such as synchronization, replication, latency etc., and focus on the idea. It has a fatal weakness: collusion. In the replication based approach, verification is done by comparing the results. What if the two clouds collude and give you the same wrong result? You know nothing and you cannot verify anything. Can the clouds collude? Of course they can. Remember, it is not about whether they will collude or not, it is about whether you believe they will collude or not. If you don’t trust the clouds, then collusion is a threat to you. In the face of collusion, verification based on 2 replicas is insecure.
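A toy example shows why comparison alone cannot catch collusion. Everything here is hypothetical: the task (squaring a number) is a stand-in for the outsourced computation, and the function names are made up for illustration.

```python
def accept_if_equal(result_a, result_b):
    """Client-side check with two replicas: accept only when both match."""
    return result_a == result_b

def honest_cloud(x):
    """Stand-in for the outsourced task: square the input."""
    return x * x

def make_colluding_pair(wrong_answer):
    """Two clouds that agree in advance to return the same wrong answer."""
    def cloud(_x):
        return wrong_answer
    return cloud, cloud

task_input = 7
cloud_a, cloud_b = make_colluding_pair(wrong_answer=0)

# The client only sees the two returned values and compares them.
accepted = accept_if_equal(cloud_a(task_input), cloud_b(task_input))

# Ground truth, which the client cannot compute cheaply in practice.
is_correct = cloud_a(task_input) == honest_cloud(task_input)
```

Here `accepted` is true while `is_correct` is false: the check passes on a wrong result. If only one of the two clouds cheated, the results would differ and the check would fail, which is why collusion, and not individual cheating, is the threat that matters.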

How to Prevent Collusion is then our objective. The technical details will follow. A spoiler from the abstract of the paper: a client “uses smart contracts to stimulate tension, betrayal and distrust between the clouds, so that rational clouds will not collude and cheat”.