At the 8th CSF Workshop on Analysis of Security APIs (13 July 2015), I presented a talk entitled “On the Trust of Trusted Computing in the Post-Snowden Age”. The workshop produces no formal proceedings, but you can find the abstract here. My slides are available here.

In the field of Trusted Computing (TC), people often take “trust” for granted. When secrets and software are encapsulated within a tamper-resistant device, users are taught to trust the device. The level of trust is sometimes boosted by the acquisition of a certificate (based on Common Criteria or FIPS).

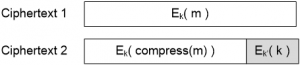

However, such “trust” can be easily misused to break security. In the talk, I used TPM as an example. Suppose TPM is used to implement secure data encryption/decryption. A standard-compliant implementation will be trivially subject to the following attack, which I call the “trap-door attack”. The TPM first compresses the data before encryption, so that it can use the saved space to insert a trap-door block in the ciphertext. The trap-door block contains the decryption key wrapped by the attacker’s key. The existence of such a trap-door is totally undetectable so long as the encryption algorithms are semantically secure (and they should be).
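The following toy Python sketch illustrates the idea; it is not the construction from the talk and not real TPM code. All function names are hypothetical, the "cipher" is a simple SHA-256 keystream standing in for a real semantically secure scheme, and the key is wrapped symmetrically for brevity (a real attacker would presumably wrap it under a public key so the device holds no attacker secret). The point it shows is that the backdoored ciphertext has exactly the same length as an honest one.

```python
import hashlib
import os
import zlib

KEY_LEN = 32    # size of the user's key and of the trap-door block
NONCE_LEN = 16


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher (illustration only): XOR with SHA-256 keystream blocks."""
    out = bytearray()
    for i in range(0, len(data), 32):
        counter = (i // 32).to_bytes(8, "big")
        block = hashlib.sha256(key + nonce + counter).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)


def honest_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """What the device is supposed to do: nonce || Enc(key, plaintext)."""
    nonce = os.urandom(NONCE_LEN)
    return nonce + keystream_xor(key, nonce, plaintext)


def backdoored_encrypt(key: bytes, attacker_key: bytes, plaintext: bytes) -> bytes:
    """Compress first, then use the saved space for a trap-door block."""
    compressed = zlib.compress(plaintext)
    if len(compressed) + KEY_LEN > len(plaintext):
        # Not enough space saved; behave honestly for this message.
        return honest_encrypt(key, plaintext)
    nonce = os.urandom(NONCE_LEN)
    # Trap-door block: the user's key wrapped under the attacker's key.
    trapdoor = keystream_xor(attacker_key, nonce, key)
    # Pad so the total output length matches the honest ciphertext.
    body = compressed.ljust(len(plaintext) - KEY_LEN, b"\x00")
    return nonce + trapdoor + keystream_xor(key, nonce, body)


def attacker_decrypt(attacker_key: bytes, ciphertext: bytes) -> bytes:
    """Unwrap the user's key from the trap-door block, then decrypt."""
    nonce = ciphertext[:NONCE_LEN]
    trapdoor = ciphertext[NONCE_LEN:NONCE_LEN + KEY_LEN]
    key = keystream_xor(attacker_key, nonce, trapdoor)
    body = keystream_xor(key, nonce, ciphertext[NONCE_LEN + KEY_LEN:])
    # decompressobj stops at the end of the zlib stream and ignores the padding.
    return zlib.decompressobj().decompress(body)
```

In this sketch, anyone holding `attacker_key` can read the plaintext without ever learning the user's key in advance, while an observer who only compares ciphertext lengths sees nothing unusual.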

To the best of my knowledge, no one has mentioned this kind of attack in the literature. But if I were the NSA, this would be the sort of attack that I would consider first. It is much more cost-effective than investing in quantum computers or parallel search machines. With the backing of the state, the NSA could coerce (and bribe) the manufacturer to implant this in the TPM. No one would be able to find out, since the software is encapsulated within the hardware and protected by the tamper resistance. In return, the NSA would have the exclusive power to read all encrypted data on a mass scale, with trivial effort spent on decryption.

Is this attack realistic? I would argue yes. In fact, according to the Snowden revelations, the NSA has already pulled a similar trick by implanting an insecure random number generator in RSA products (the NSA reportedly paid RSA US$10m). What I have described is a different trick, and there may well be many more similar ones.

This attack highlights the importance of taking into account the threat of a state-funded adversary in the design of a Trusted Computing system in the post-Snowden age. The essence of my presentation is a proposal to change the (universally held) assumption of “trust” in Trusted Computing to “trust-but-verify”. I gave a concrete solution in my talk to show that this proposal is not just a theoretical concept, but is practically feasible. As highlighted in my talk, my proposal constitutes only a small step in a long journey, but I believe it is an important step in the right direction.

Topics about the NSA and mass surveillance are always heavy and depressing. So while in Verona (where the CSF workshop was held), I took the opportunity to tour around the city. It was a pleasant walk with views of well-preserved ancient buildings, sunny weather (yes, a luxury for someone coming from the UK) and the familiar sound of cicadas (which I used to hear every summer during my childhood in China).

The Verona Arena is the area that attracts most of the tourists. The conference organizers highly recommended that we attend one of the operas, but I was eventually deterred by the thought of having to sit for 4 hours and listen to a language that I couldn’t understand. So I decided to wander freely. As I entered the second floor of a shop that sold hand-made sewing items, my attention was suddenly drawn by someone who shouted passionately while pointing out of the window, “Look, that’s the balcony where Juliet and Romeo used to date!” Wow, I was infected by the excitement and quickly took a photo. In the photo below, you can see the Juliet statue next to the balcony. (Of course a logical mind will question how this dating was possible given that the two are just fictional characters, but why should anyone care? It was in Verona, a city of legends.)