On my recent trip to Arizona for CCS 2014 I took some time out to fly paragliders in the desert with Arizona Paragliding. It turns out that they also design and manufacture the SlingMachine towing winch, which is used to transport paraglider pilots safely from the desert floor to 3,000 feet in a matter of minutes. When I arrived, Sean Buckner, the designer and creator of the SlingMachine, was in the process of automating the towing winch. Sean had already designed and built the electronics for the automated control system, and I helped design and build the software for the automated control box.

This is me being towed into the air by the SlingMachine, controlled by software designed and written by Sean Buckner and me:

It felt amazing to be towed into the air by my own software, and as we perfected the software I could actually feel the changes we were making to it.

This is very cool, but you may be wondering how it is relevant to software reliability and security. The end goal of the project is to develop embedded devices (hardware and software) that put some science into improving the safety of paragliding as a sport.

We have made a start by creating an automated control box (with associated software) for the SlingMachine's winch. The automated control box makes the towing process more repeatable (delivering the same tow every time) and thereby safer, because it is less prone to certain categories of operator error. We are also developing a pilot telemetry pack to increase pilot safety on the towing line and a novice pilot telemetry pack to help with pilot safety during training flights.

This blog entry is about what I feel is a cool software project with obvious safety and reliability issues. It is also a call for help to the members of Secure and Reliant Systems (SRS) and the Centre for Software Reliability (CSR): we are continuing to develop the software and to create new projects that are designed to improve safety in paragliding.

So my question to you is: how do we develop ultra-reliable software quickly, with minimal manpower?

What does the software do?

The software we wrote controls the winch towing the paraglider pilot into the air, and as the pilot on the end of the tow line you want to know that the software is going to perform reliably and not do anything unexpected.

We only had a short time to implement the software and very limited manpower. This creates a problem, as high integrity software development techniques significantly increase the development time and manpower required. So we had to get smart to develop highly reliable software in a rapid application development (RAD) scenario. To achieve this we applied these six principles:

- Human operator override; this is an essential safety feature in our approach to developing and testing the software. The human tow operator can, at any point, flick a switch to override the software and take control of the towing process, just as the pilot of a modern jetliner can override the autopilot when the situation exceeds the conditions the autopilot can deal with. In both systems the human is the final safety backstop.

- Keep it simple; don’t overcomplicate the software.

- Build a test environment for the control box; this consisted of a set of potentiometers and switches that replicated the inputs of the sensors on the SlingMachine winch. We could set the dials to replicate any given situation on a tow without actually launching a glider.

- Split the software into small self-contained functional elements, each of which can be implemented and tested separately. We would complete each element and lock it off before moving on to the next.

- Test, test and test again… structured edge case testing allows us to repeatedly test situations that may only occur once in a thousand tows. This means that when that 1 in 1,000 case occurs the software will handle it.

To achieve this we built a static test environment for the control box, which mimicked the input from the sensors on the towing winch using potentiometers and switches. The test environment allows us to set up those 1 in 1,000 scenarios on the bench rather than in the field.

- Good old-fashioned debug text output; the Arduino microcontroller, on which the software was built, can output a constant stream of debug text over the serial line to a PC, which records the data. This gives us a text log of each line of code the Arduino runs. We can then analyse the text log after each test run to verify that the software operated as expected given the inputs from the tow operator and the pressures exerted on the tow line by the glider pilot.
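The override and debug output principles above can be sketched in a few lines. This is a minimal illustration in plain C++ that runs on a PC, not the SlingMachine firmware: the `Inputs` struct, `selectOutput`, `debugLine` and the log format are all hypothetical names chosen for the example. On the Arduino the line would be written with `Serial.println` rather than returned as a string.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical snapshot of the inputs sampled once per control loop.
struct Inputs {
    bool overrideSwitch;  // true when the operator has flicked the override switch
    int operatorDial;     // operator's manual setting, raw 0..1023
    int autoCommand;      // value the automatic logic would like to output, raw 0..1023
};

// The override is checked first, so nothing in the automatic path
// can outrank the human operator.
int selectOutput(const Inputs& in) {
    if (in.overrideSwitch) {
        return in.operatorDial;
    }
    return in.autoCommand;
}

// One line of debug text per loop: the inputs seen and the output chosen.
std::string debugLine(unsigned long ms, const Inputs& in, int output) {
    char buf[96];
    std::snprintf(buf, sizeof buf, "t=%lu override=%d dial=%d auto=%d out=%d",
                  ms, in.overrideSwitch ? 1 : 0, in.operatorDial,
                  in.autoCommand, output);
    return std::string(buf);
}
```

Logging the inputs alongside the chosen output on the same line is what makes the recorded text usable later: each line can be checked on its own against the expected behaviour.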

Our strategy delivered ultra-reliable software in a very short time with minimal manpower. However, this does pose a very important question: was the quality of the software we produced improved by the fact that we were flying the test flights towed by our own software?

Software Design

The physical design of the SlingMachine is based on a variable friction tow line pay-out system. This design means that when the glider exerts more force on the tow line, the winch increases the rate at which the tow line is released, thereby smoothing out the towing experience for the paraglider pilot.

In addition, the skill and experience of the towing operators, Sean and Abe Heward, ensure a safe and smooth ride to 3,000 feet every time.

So what does the software automate?

To automate the towing process we needed to capture the knowledge inside the head of SlingMachine’s creator and designer, Sean, and condense it into software. The objective is to create software that can deliver as safe and reliable a tow as the human operator.

By no means does the software replace the tow operator, as the operator is performing the complex task of monitoring the flight of the pilot and glider to ensure that they are flying safely. The software takes over monitoring the towing pressure exerted on the glider and adjusting the towing speed to compensate for changes in the wind speed / direction and the effect of thermals.

This allows the tow operator to concentrate on how the pilot is flying, focussing on whether to proceed with the tow or to abort it if the pilot gets into difficulties. For instance, during the launch phase, while the pilot is running along the ground, the operator verifies that the paraglider wing is flying straight and level above the pilot’s head before applying the additional tow pressure to lift the pilot into the air.
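To make the "monitor and adjust" idea concrete, here is a minimal sketch of one control step, assuming a simple proportional correction. The actual SlingMachine algorithm is not described in this post, so `TowSettings`, `adjustOutput`, the gain and the limits are all hypothetical, and the units are arbitrary.

```cpp
#include <algorithm>
#include <cassert>

// Illustrative settings only; none of these numbers come from the SlingMachine.
struct TowSettings {
    double targetForce;  // desired force on the tow line
    double gain;         // how strongly to react to a force error
    double minOutput;    // lowest allowed actuator command
    double maxOutput;    // highest allowed actuator command
};

// One control step: move the actuator command in the direction that
// reduces the force error, then clamp it to the allowed range.
double adjustOutput(double currentOutput, double measuredForce,
                    const TowSettings& s) {
    double error = s.targetForce - measuredForce;  // positive: pilot feels too little pull
    double next = currentOutput + s.gain * error;
    return std::max(s.minOutput, std::min(next, s.maxOutput));
}
```

Clamping the result means a faulty sensor reading can only push the output as far as the configured limits, which keeps the worst case bounded.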

So why is the towing pressure exerted on the pilot important?

Because the air is lumpy. Ultra-light aircraft, such as paragliders and hang gliders, are very sensitive to wind gusts, wind shifts and rising or descending air caused by thermals. The air through which the glider is travelling can change speed and direction relative to the source of the towing force, which is attached to the ground. This causes the force exerted on the glider to change rapidly even though the speed of the towing vehicle and the force applied by the towing winch remain the same.

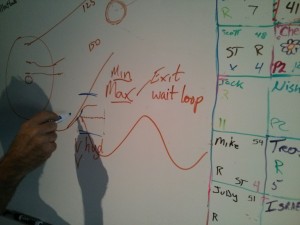

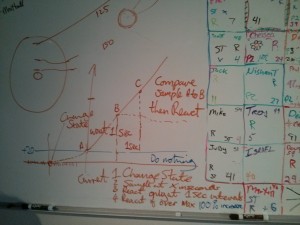

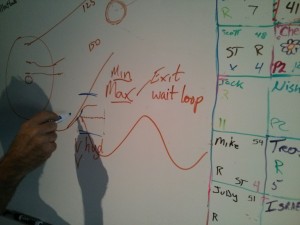

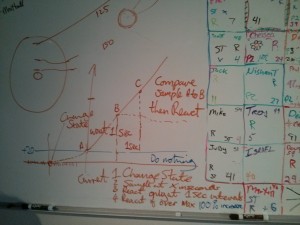

These two photos show a whiteboard design session where we are discussing a mild feedback oscillation created when the pilot has several hundred feet of altitude, meaning that there are several hundred feet of tow line between the pilot and the towing winch. The control box detects a change in the force exerted on the tow line and alters the towing pressure accordingly. However, there is a delay between the change in towing resistance and the resultant effect on the glider. The control box must compensate for this delay, otherwise it causes a feedback oscillation, which the pilot feels as a gentle pull and relax, pull and relax motion. What was really cool for me was being the pilot on the end of the tow line, feeling that oscillation and knowing exactly what the software was doing and why. I was thinking to myself: when I get back on the ground, I know how to fix that.

Whiteboard session 1

Whiteboard session 2
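The feedback oscillation discussed in the whiteboard session can be reproduced with a toy simulation. This is a sketch under strong assumptions, not a model of the real winch: the force felt by the pilot is taken to be the command issued `delaySteps` loop iterations earlier plus a constant gust, and the controller simply adds `gain` times the error each step. The function name and all numbers are hypothetical. Lowering the gain is used here as one simple way to tame the delay; it is not necessarily how the control box compensates.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns the largest |force error| seen in the final quarter of the run.
// A value near zero means the loop settled; a large value means it is
// still oscillating (pull and relax, pull and relax).
double lateOscillation(int delaySteps, double gain, int steps) {
    const double target = 100.0;      // desired line force, arbitrary units
    const double disturbance = 1.0;   // gust adding one unit of force from step 0
    // command[k] is the command issued at step k; before the gust it equals target.
    std::vector<double> command(static_cast<std::size_t>(steps) + 1, target);
    double worst = 0.0;
    for (int k = 0; k < steps; ++k) {
        int seen = k - delaySteps;    // the command that is only now reaching the glider
        double effective = (seen >= 0) ? command[seen] : target;
        double force = effective + disturbance;
        double error = target - force;
        command[k + 1] = command[k] + gain * error;
        if (k >= steps - steps / 4) {
            worst = std::max(worst, std::fabs(error));
        }
    }
    return worst;
}
```

With no delay, `lateOscillation(0, 0.5, 200)` settles to essentially zero. With a five-step delay and the same gain the error keeps growing, while `lateOscillation(5, 0.1, 200)` settles again. The controller keeps correcting for an error that its earlier corrections are already about to remove, which is the overshoot the pilot feels.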

The SlingMachine control box

The control box has 2 dials, which set (1) the target towing resistance, based on the pilot's weight, and (2) the target towing force, adjusted for the wind shifts and thermals experienced by the glider. There are 5 mode switches for the different stages of the tow:

- Pre-tension; puts tension on the towing line prior to launch.

- Pre-launch; increases the line tension, assisting the pilot whilst transitioning from pilot stationary and glider on the ground to pilot running on the ground with the glider flying above his/her head.

- Launch; once the glider is flying straight and stable, increases the line tension to lift the pilot into the air and gain some initial altitude.

- Towing; this is the main body of the tow, when the pilot will gain the majority of the altitude.

- Rewind; when the tow is complete and the pilot releases the towline, the winch automatically rewinds the towing line.
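The five stages map naturally onto an enumeration. The sketch below is illustrative only: `TowMode`, `modeName` and `selectMode` are hypothetical names, and the rule that exactly one mode switch must be on (anything else is rejected) is an assumption made for the example rather than documented SlingMachine behaviour.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstring>

// The five stages of a tow, in the order they occur.
enum class TowMode { PreTension, PreLaunch, Launch, Towing, Rewind };

const char* modeName(TowMode m) {
    switch (m) {
        case TowMode::PreTension: return "Pre-tension";
        case TowMode::PreLaunch:  return "Pre-launch";
        case TowMode::Launch:     return "Launch";
        case TowMode::Towing:     return "Towing";
        case TowMode::Rewind:     return "Rewind";
    }
    return "Unknown";
}

// Assumption: one switch per mode, and exactly one may be on at a time.
// Returns false and leaves `out` untouched if zero or several are on,
// so the caller can treat that as an invalid panel state.
bool selectMode(const std::array<bool, 5>& switches, TowMode& out) {
    int onCount = 0;
    std::size_t onIndex = 0;
    for (std::size_t i = 0; i < switches.size(); ++i) {
        if (switches[i]) {
            ++onCount;
            onIndex = i;
        }
    }
    if (onCount != 1) {
        return false;
    }
    out = static_cast<TowMode>(onIndex);
    return true;
}
```

Rejecting ambiguous switch combinations explicitly keeps the "what mode am I in?" question out of the rest of the control code.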

The control box is based on the Arduino Mega 2560 microcontroller, which is perfect for the task of constantly monitoring several analogue input channels and setting the appropriate outputs to control devices in the physical world.
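As an illustration of what reading an analogue channel involves: on the Mega 2560, `analogRead` returns a 10-bit value from 0 to 1023. The sketch below converts such a raw reading to volts and to an engineering unit. It is plain C++ so it can be run on a PC, it assumes the default 5 V analogue reference, and the helper names and calibration end points are hypothetical.

```cpp
#include <cassert>

const int kAdcMax = 1023;            // largest value a 10-bit ADC can report
const double kReferenceVolts = 5.0;  // assumption: default 5 V analogue reference

// Guard against out-of-range values, e.g. from a corrupted recording.
int clampRaw(int raw) {
    if (raw < 0) return 0;
    if (raw > kAdcMax) return kAdcMax;
    return raw;
}

// Raw ADC count to volts at the input pin.
double rawToVolts(int raw) {
    return clampRaw(raw) * kReferenceVolts / kAdcMax;
}

// Linear map from a raw count to a physical quantity, given the
// (hypothetical) calibrated values at zero and at full scale.
double rawToUnits(int raw, double unitsAtZero, double unitsAtFullScale) {
    double fraction = static_cast<double>(clampRaw(raw)) / kAdcMax;
    return unitsAtZero + fraction * (unitsAtFullScale - unitsAtZero);
}
```

For example, `rawToUnits(reading, 0.0, 200.0)` would turn a dial or sensor reading into a value between 0 and 200 in whatever unit the calibration uses.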

What lessons did I learn?

Developing this software was the most fun and the biggest challenge.

We developed highly reliable software in a short space of time with minimal resources. However, in my view this was only possible on a small scale project such as the SlingMachine towing software. This is because the software is performing a relatively simple and well defined set of tasks: it monitors the towing pressure applied to the pilot and adjusts it to maintain the optimum in changeable flight conditions.

The six principles we used to develop the software focused on creating ultra-reliable software with the limited resources at our disposal. The principles were based on common sense and 25+ years of developing high integrity systems.

Given my background in high integrity systems, the next step for the SlingMachine software is to run extensive edge case testing. To this end we have built a test rig for the control box, with which we can feed in sensor readings as if they came from the SlingMachine winch. The sensor readings can come from 2 sources:

- We can dial in sensor readings with potentiometers. This allows us to test the edge cases that may only occur in 1 in 1,000 tows.

- We can play back real input sensor readings recorded from actual tows of real pilots. These recordings allow us to refine the software algorithms by comparing the performance of new software revisions against the recording of the old software. The advantage of using recorded sensor readings to test the software is that the tests are as close to real life as possible without a real pilot having to leave the ground.
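The playback idea can be sketched as a small comparison harness: feed the same recorded readings to two software revisions and report where their outputs differ most. This is an illustrative outline only. `Controller`, `ReplayDiff` and `compareRevisions` are hypothetical names, and a controller is reduced here to a function from one measured force to one output command.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// A software revision, reduced to: measured force in, output command out.
using Controller = std::function<double(double)>;

struct ReplayDiff {
    double maxDifference;    // largest |new - old| output over the recording
    std::size_t worstIndex;  // index of the sample where it occurred
};

// Replays one recording through both revisions and finds the biggest disagreement.
ReplayDiff compareRevisions(const std::vector<double>& recordedForce,
                            const Controller& oldRevision,
                            const Controller& newRevision) {
    ReplayDiff result{0.0, 0};
    for (std::size_t i = 0; i < recordedForce.size(); ++i) {
        double diff = std::fabs(newRevision(recordedForce[i]) -
                                oldRevision(recordedForce[i]));
        if (diff > result.maxDifference) {
            result.maxDifference = diff;
            result.worstIndex = i;
        }
    }
    return result;
}
```

One limitation worth keeping in mind is that a recording is open loop: the recorded readings do not change in response to what the new revision outputs, so playback shows how a revision reacts to real inputs but not how the glider would have reacted to the revision.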

So What Next?

The initial software development was done in 3 weeks, which was the duration of my visit to Arizona, during which the primary aim was to get the software operational before the end of my trip. We are now developing new embedded hardware / software modules to monitor live telemetry from the pilot and further enhance pilot safety.

Tow pilot telemetry pack:

This monitors the flight of the paraglider pilot and relays that information to the tow operator at the controls of the winch. It gives the tow operator a much better picture of whether the pilot is flying well or whether things are starting to go outside the norm. This is especially valuable when the pilot has gained some altitude and is therefore further away, so that the visual signs that the pilot is getting into difficulties are much harder to see.

Novice pilot telemetry logging pack;

Once a novice pilot has released from the tow line they are in free flight under instruction (over the radio) from an instructor on the ground. The novice pilot has a number of tasks to do on each of their free flights, and these tasks would usually be done at the highest possible altitude, as this gives the greatest safety margin when trying new manoeuvres. The recorded telemetry allows the instructor to better understand what the pilot did during their flight: pilot position, height, turning forces, rate of climb / descent and lateral acceleration.

This data also allows the instructor to verify that the novice pilot has completed the assigned task, even when the pilot was at several thousand feet when the task was performed. It may also be the case that the glider has done something the novice pilot did not expect during the flight, either because of external forces (wind or thermals) or because of overactive input from the pilot. The instructor can use the telemetry data to help the novice understand what happened and what to do in the future.
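As a rough idea of what a logged record and a derived quantity might look like, the sketch below defines a record with the kinds of fields listed above and computes rate of climb or descent from consecutive altitude samples. The struct layout, field names and units are assumptions for illustration; the real pack's data format is not described in this post.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical log record; field names and units are assumptions.
struct TelemetryRecord {
    double timeSec;        // time since the start of the log
    double latitudeDeg;    // pilot position
    double longitudeDeg;
    double altitudeM;      // height, e.g. from a barometric altimeter
    double lateralAccelG;  // lateral acceleration
};

// Rate of climb (positive) or descent (negative) in m/s between two records.
// Returns 0 if the timestamps do not advance, to avoid dividing by zero.
double climbRate(const TelemetryRecord& earlier, const TelemetryRecord& later) {
    double dt = later.timeSec - earlier.timeSec;
    if (dt <= 0.0) {
        return 0.0;
    }
    return (later.altitudeM - earlier.altitudeM) / dt;
}

// Most negative climb rate anywhere in the log, or 0 if the log never descends
// or has fewer than two records.
double worstSinkRate(const std::vector<TelemetryRecord>& log) {
    double worst = 0.0;
    for (std::size_t i = 1; i < log.size(); ++i) {
        double rate = climbRate(log[i - 1], log[i]);
        if (rate < worst) {
            worst = rate;
        }
    }
    return worst;
}
```

An instructor-facing tool could run summaries like `worstSinkRate` over a flight log to point at the moments worth discussing with the novice.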

Novice pilot safety pack;

This will be an extension to the novice telemetry pack, which will use the input from sensors such as the accelerometer and barometric altimeter to identify when the pilot gets into unsafe situations. For instance, it could sound a warning if the pilot performs aggressive turns at too low an altitude, or if the pilot is performing an increasingly aggressive spiral turn of more than 360°.
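The two example warnings could be expressed as simple rules over a stream of samples. The sketch below is only a starting point under stated assumptions: the thresholds (100 m, 30°/s, 20°/s) are invented for illustration, the turn rate is assumed to be available already, and the "increasingly aggressive" part of the spiral rule is not modelled; only the accumulated turn beyond 360° is checked.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical thresholds; real values would need input from instructors.
const double kLowAltitudeM = 100.0;            // below this, aggressive turns warn
const double kAggressiveRateDegPerSec = 30.0;  // turn rate counted as aggressive
const double kSpiralRateDegPerSec = 20.0;      // minimum rate counted as part of a spiral

struct TurnSample {
    double altitudeAglM;       // height above ground, metres
    double turnRateDegPerSec;  // positive = right, negative = left
    double dtSec;              // time since the previous sample
};

class TurnMonitor {
public:
    // Feeds one sample; returns true if a warning should sound.
    bool update(const TurnSample& s) {
        double rate = std::fabs(s.turnRateDegPerSec);
        int direction = (s.turnRateDegPerSec > 0.0) - (s.turnRateDegPerSec < 0.0);

        // A change of direction or a gentle turn ends the current spiral.
        if (direction != lastDirection_ || rate < kSpiralRateDegPerSec) {
            turnedDeg_ = 0.0;
        }
        if (rate >= kSpiralRateDegPerSec) {
            turnedDeg_ += rate * s.dtSec;
        }
        lastDirection_ = direction;

        bool lowAndAggressive =
            s.altitudeAglM < kLowAltitudeM && rate > kAggressiveRateDegPerSec;
        bool longSpiral = turnedDeg_ > 360.0;
        return lowAndAggressive || longSpiral;
    }

private:
    double turnedDeg_ = 0.0;  // degrees turned continuously in one direction
    int lastDirection_ = 0;   // -1 left, 0 none, +1 right
};
```

Keeping each rule as a named boolean makes it straightforward to log which rule fired, which fits the debug text approach used for the control box.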

The development strategy we have used for the SlingMachine's control box has worked well so far. We are very open to suggestions for a development strategy that will enhance the security and reliability of the pilot safety projects and of the control box as development continues and the software gets more complex.

Thanks,

Martin Emms