ISG will be based in Black Horse House (next to the Marjorie Robinson Library) from the week commencing October 8th 2018. The Claremont complex, home to the various guises of the university computing service since it opened in 1968, is going to be completely refurbished. Farewell Claremont, thank you for the last 50 years!


Office 365 and SPAM filtering

We’ve started the process of migrating staff email to Office 365 (see http://www.ncl.ac.uk/itservice/email/staff/upgradingtomicrosoftoffice365/). We moved the IT Service this week. It wasn’t totally without problems, but that’s one reason we started with ourselves.

We’ve had some feedback that people are getting more spam since the move, which surprised us. We’re using Office 365 in hybrid mode, which means that all mail from outside the University comes through our on-site mail gateways (as it always has) before being delivered to the Office 365 servers.

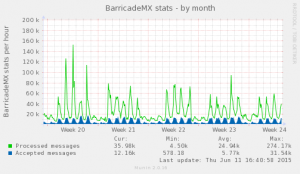

The graph shows stats for the last month: we’re still rejecting over 80% of the messages that arrive at the gateways. We know this isn’t catching everything, but there’s a dramatic difference between an unfiltered mailbox and a filtered one.

Infrastructure issues (part 2)

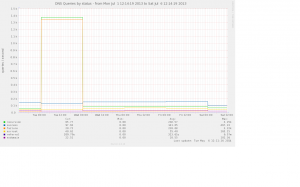

Back in March we had performance issues with our firewalls. One of the things our vendor raised was what they saw as an unusually high number of DNS queries to external servers: we were seeing around 2,000 to 3,000 requests per second from our caching DNS servers to the outside world.

A bit more monitoring (encouraged by other sites reporting significantly lower rates than us) identified a couple of sources of unusual load:

1. The solution we use for filtering incoming mail sends DNS queries to all servers listed in resolv.conf in parallel. That gives no benefit in our environment, so we reconfigured it to use only the caching DNS server on localhost.

2. We were seeing high rates of reverse lookups for IP addresses in ranges belonging to Google (and others) that were getting SERVFAIL responses. These responses aren’t cached, so every repeat lookup results in a query to external servers. To test this theory I installed dummy empty reverse zones on the caching name servers, and the queries immediately dried up: with the fake empty zones in place, the local servers returned a cacheable NXDOMAIN rather than SERVFAIL.
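The post doesn’t say which mail-filtering product was involved or how it is configured, so the following is only a sketch of the principle behind item 1, written with Node.js’s built-in `dns` module: a resolver pinned to a single local caching server (assumed to be listening on 127.0.0.1) instead of the full resolv.conf list.

```javascript
// Sketch only: the mail filter's real configuration is not shown in the post.
// Assumes a caching DNS server (e.g. BIND) is listening on 127.0.0.1:53.
const { Resolver } = require('dns').promises;

function localOnlyResolver() {
  const resolver = new Resolver();
  // Replace the system-wide server list (taken from resolv.conf) with the
  // local cache only, so every lookup goes to that cache and nowhere else.
  resolver.setServers(['127.0.0.1']);
  return resolver;
}

// Usage (needs the local caching server to be running):
// localOnlyResolver().resolve4('www.ncl.ac.uk').then(console.log, console.error);
```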

An example of a query that results in SERVFAIL is www.google.my (it should be www.google.com.my). That was being requested half a dozen times a second through one of our DNS servers. www.google.my just caught my eye; there are probably many others generating a similar rate.
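To make the SERVFAIL versus NXDOMAIN difference concrete, here is a toy model in JavaScript. It is not how BIND works internally: it ignores TTLs and negative-cache lifetimes and simply assumes, as described above, that NXDOMAIN answers are cached while SERVFAIL answers are not. The `emptyZones` option stands in for the dummy empty reverse zones, and the address used is from the 192.0.2.0/24 documentation range, not a real Google range.

```javascript
// Toy model only: ignores TTLs and assumes NXDOMAIN is cached but SERVFAIL is not.

// Reverse-lookup name for an IPv4 address: 192.0.2.1 -> 1.2.0.192.in-addr.arpa
function reverseName(ipv4) {
  return ipv4.split('.').reverse().join('.') + '.in-addr.arpa';
}

class ToyCachingResolver {
  // upstream: function(name) -> 'NOERROR' | 'NXDOMAIN' | 'SERVFAIL'
  // emptyZones: zones this server answers itself with NXDOMAIN (the "dummy empty zones")
  constructor(upstream, emptyZones = []) {
    this.upstream = upstream;
    this.emptyZones = emptyZones;
    this.cache = new Map();
    this.upstreamQueries = 0;
  }

  lookup(name) {
    // Names inside a local empty zone never leave the server.
    if (this.emptyZones.some(z => name === z || name.endsWith('.' + z))) {
      return 'NXDOMAIN';
    }
    if (this.cache.has(name)) return this.cache.get(name);
    this.upstreamQueries += 1;
    const rcode = this.upstream(name);
    // SERVFAIL is not stored, so the next lookup for this name goes upstream again.
    if (rcode !== 'SERVFAIL') this.cache.set(name, rcode);
    return rcode;
  }
}

// Look the same name up `repeats` times and report how many queries went upstream.
function countUpstream(name, rcode, repeats, emptyZones = []) {
  const resolver = new ToyCachingResolver(() => rcode, emptyZones);
  for (let i = 0; i < repeats; i++) resolver.lookup(name);
  return resolver.upstreamQueries;
}

console.log(countUpstream('www.google.my', 'SERVFAIL', 6)); // 6
console.log(countUpstream('www.google.my', 'NXDOMAIN', 6)); // 1
console.log(countUpstream(reverseName('192.0.2.1'), 'SERVFAIL', 6, ['2.0.192.in-addr.arpa'])); // 0
```

Every repeat of a SERVFAIL name costs another external query, while a cached NXDOMAIN (or a locally answered empty zone) absorbs the repeats.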

Asking colleagues at other institutions via the ucisa-ig list and on ServerFault reinforced the hypothesis that (a) the main DNS servers were doing the right thing and (b) this was a local config problem (because no-one else was seeing this).

We turned on query logging on the BIND DNS servers and used the usual grep/awk/sort pipeline to summarise the logs. That showed that most requests were coming from the Windows domain controllers.
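The exact pipeline isn’t given here. As a rough JavaScript equivalent of `grep | awk | sort | uniq -c | sort -rn`, the sketch below counts BIND query-log lines per client address. BIND’s query-log line format differs between versions (newer releases add an `@0x` object pointer before the client address), so the regular expression is an assumption to check against your own logs; the sample lines are made up.

```javascript
// Count BIND query-log lines per client address, busiest first.
// Assumed line shape: "... client [@0x...] ADDRESS#PORT (name): query: name IN TYPE ..."
function topClients(logText, limit = 10) {
  const clientRe = /client (?:@\S+ )?([0-9A-Fa-f.:]+)#\d+/;
  const counts = new Map();
  for (const line of logText.split('\n')) {
    if (!line.includes('query:')) continue; // keep only query log entries
    const match = clientRe.exec(line);
    if (!match) continue;
    const client = match[1];
    counts.set(client, (counts.get(client) || 0) + 1);
  }
  // Sort by count, descending, like `sort | uniq -c | sort -rn`.
  return [...counts.entries()].sort((a, b) => b[1] - a[1]).slice(0, limit);
}

// Made-up sample lines using documentation addresses.
const sample = [
  'queries: info: client 192.0.2.10#51234 (www.google.my): query: www.google.my IN A + (198.51.100.1)',
  'queries: info: client 192.0.2.10#51235 (www.google.my): query: www.google.my IN A + (198.51.100.1)',
  'queries: info: client 192.0.2.20#40001 (www.ncl.ac.uk): query: www.ncl.ac.uk IN A + (198.51.100.1)',
].join('\n');

console.log(JSON.stringify(topClients(sample))); // [["192.0.2.10",2],["192.0.2.20",1]]
```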

Armed with this information, we looked at the configuration on the Windows servers again and the cause was obvious. It was a very long-standing misconfiguration of the DNS server on the domain controllers: they were set to forward not only to a pair of caching servers running BIND (as I thought) but also to all the other domain controllers, which would in turn forward the request to the same set of servers. I’m surprised that this hadn’t caused worse problems or shown up earlier, since as long as a domain returns SERVFAIL the requests just keep circulating.
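A toy model helps show why the forwarding loop amplified SERVFAIL lookups. It assumes, hypothetically, that each domain controller tries both BIND servers and then each of its peers in turn whenever it gets SERVFAIL, and it uses a hop limit as a stand-in for the timeouts that eventually stop a real query. Windows DNS’s actual forwarder logic is not modelled, and the DC counts below are illustrative only.

```javascript
// Toy model of DCs that forward to two BIND caches and (optionally) to every peer DC.
// Every BIND lookup is assumed to hit an external server and return SERVFAIL.
function externalQueriesForOneLookup(numDCs, hopLimit, forwardToPeers) {
  let externalQueries = 0;

  function askBind() {
    externalQueries += 1; // SERVFAIL is never cached, so this always goes out
    return 'SERVFAIL';
  }

  function askDC(dc, hopsLeft) {
    // First try both caching BIND servers.
    for (let b = 0; b < 2; b++) {
      if (askBind() !== 'SERVFAIL') return 'NOERROR';
    }
    if (!forwardToPeers || hopsLeft === 0) return 'SERVFAIL';
    // The misconfiguration: try every other DC, which repeats the whole process.
    for (let peer = 0; peer < numDCs; peer++) {
      if (peer === dc) continue;
      if (askDC(peer, hopsLeft - 1) !== 'SERVFAIL') return 'NOERROR';
    }
    return 'SERVFAIL';
  }

  askDC(0, hopLimit);
  return externalQueries;
}

console.log(externalQueriesForOneLookup(3, 2, false)); // 2
console.log(externalQueriesForOneLookup(3, 2, true));  // 14
console.log(externalQueriesForOneLookup(4, 3, true));  // 80
```

Without peer forwarding one failed lookup costs one query per caching server; with it, the count grows geometrically with the number of DCs and the number of hops a query survives.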

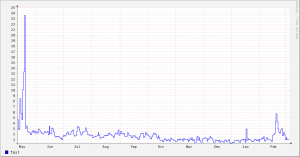

The graph shows the rate of requests that gave a SERVFAIL response; note the sharp decrease in March when we made the change to the DNS config on the AD servers. [In a fit of tidiness I deleted the original image file and now don’t have the stats to recreate it, so the replacement doesn’t cover the same period.]

I can see why this might have seemed like a sensible configuration at the time: at one level it looks similar to a set of Squid proxies asking their peers if they already have a resource cached. Queries that didn’t result in SERVFAIL were fine, so the obvious tests wouldn’t show any problems.

Postscript: I realised this morning that we’d almost certainly seen symptoms of this problem early last July. The graph shows the very sharp increase in requests, followed by the sharp decrease when we installed some fake empty zones. This high level of requests was provoked by an unknown client on campus looking up random hosts in three domains which were all returning SERVFAIL. Sadly we didn’t identify the DC misconfiguration at the time.

Recent infrastructure issues (part 1)

It’s not been a great few months for IT infrastructure here. We’ve had a run of problems which have had significant impact on the services we deliver. The problems have all been in the foundation services which means that their effects have been wide-ranging.

This informal post is aimed at a technical audience. It’s written from an IT systems point of view because that’s my background. We’ve done lengthy internal reviews of all of these incidents from technical and incident-handling viewpoints, and we’re working on (a) improving communications during major incidents and (b) making our IT infrastructure more robust.

Back in November and December we had a long-running problem with the performance and reliability of our main IT infrastructure. At a system/network level this appeared to be unreliable communications between servers and the network storage they use (the majority of our systems use iSCSI storage so are heavily reliant on a reliable network). We (and our suppliers) spent weeks looking for the cause and going down several blind alleys which seemed very logical at the time.

The problems started after one of the network switches at our second data centre failed over from one controller to the stand-by controller. There were no indications of any problems with the new controller, so our theory was that something external had happened which caused the failover _and_ led to the performance problems. We kept considering the controller as a potential cause but discounted it since it reported itself as healthy.

After checking the obvious things (faulty network connections, “failing but not yet failed” disks) we sent a bundle of configs and stats to the vendor for them to investigate. They identified some issues with mismatched flow control on the network links. Their theory was that this had been the case since installation but only had significant impact as the systems got busier. We updated the configuration on both sides of the link, which seemed to give some improvement but obviously didn’t fix the underlying problem. We went back to the vendor and continued investigating across all of the infrastructure, but nothing showed up as a root cause.

Shortly before the Christmas break we failed over from the (apparently working) controller card in the main network switch at our second data centre to the original one – this didn’t seem logical as it wasn’t reporting any errors but we were running out of other options. However (to our surprise and delight) this brought an immediate improvement in reliability and we scheduled replacement of the (assumed) faulty part. We all gave a heavy sigh of relief (this was the week before the University closed for the Christmas break) and mentally kicked ourselves for not trying this earlier (despite the fact that the controller had been reporting itself as perfectly healthy throughout).

At the end of January similar issues reappeared. Having learnt our lesson from last time, we failed over to the new controller very quickly. This didn’t have the hoped-for effect, but we convinced ourselves that things were recovering; in hindsight, the improvement was because it was late on Friday afternoon and the load was decreasing. On Saturday morning things were worse and the team reassembled to investigate. This time we identified one of a pair of network links which was reporting errors. The two links were bonded together to provide higher bandwidth and a degree of resilience. We disabled the faulty component, leaving the link working but with half the usual throughput (still enough to handle normal usage), and this fixed things (we thought). Services were stable for the rest of the week, but on Monday morning it was clear that there was still a problem. At this point we failed back to the original controller and things improved. Given that we were confident that the controller itself wasn’t faulty (it had been replaced at the start of the month), the implication was that there was a problem with the switch itself, which is a much bigger problem. We’re now working with our suppliers to investigate and fix this with minimal impact on service to the University.

In the last few weeks we’ve had problems with the campus network being overloaded by fallout from an academic practical exercise, a denial of service attack on the main web server, and thousands of repeated requests to external DNS servers causing the firewall to run out of resources, but they’re stories for another day.

Problem (bug?) deleting folders in Outlook 2013

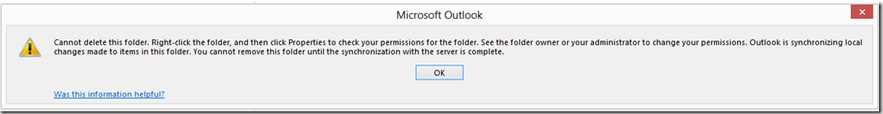

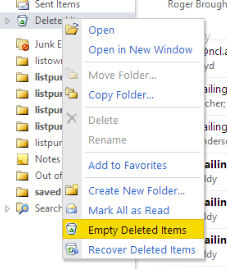

Doing some tidying up of a mailbox the other day, and wanted to delete some empty folders from the Deleted Items (i.e. permanently delete them). For some reason Outlook gave me the following error:

Opening up Deleted Items and trying to delete each folder in turn gave me this slightly different one – but equally frustrating :

Attempts to permanently delete the items (using SHIFT and Delete) drew a blank – same problem.

Playing with the folder permissions (via the properties pane) – no joy.

Clearly this is a bug in Outlook (and not anything I was doing wrong). The workaround was to log into the mailbox using Outlook Web Access (OWA) and try it from there, which worked with no problem.

It would seem that the synchronisation logic that the full client uses is buggy – it’s useful to remember that you can sometimes do things in OWA that you cannot with Outlook if you run into trouble.

Web proxy changes (reverted)

Unfortunately we’ve had to back out the change to the proxy config. We found that Windows XP clients didn’t handle the change properly and lost access to external web sites.

The good news is that the vast majority of clients worked fine so once we’ve developed a plan for handling the older machines we’ll try again (in the new year).

Web Proxy Changes

On Monday 16th December 2013 we’ll be changing the content of the proxy auto configuration (PAC) script that web browsers and other applications use to automatically configure use of a web proxy. The web proxies have been unnecessary for web access since the introduction of NAT at our network border and this change will reduce the number of active clients using them.

The current PAC script provides this configuration (simplified for clarity):

```javascript
function FindProxyForURL(url, host)
{
    // Every request goes via the load-balanced proxy address
    return "PROXY 128.240.229.4:8080";
}
```

This configures web clients to proxy their requests through our load-balanced proxy address at 128.240.229.4. The new PAC config will be:

```javascript
function FindProxyForURL(url, host)
{
    // No proxy: every request is fetched directly
    return "DIRECT";
}
```

This will configure clients to not use a proxy and just fetch content directly.
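One way to check what a PAC script will tell clients, before and after a change like this, is to evaluate it with Node.js’s `vm` module and call `FindProxyForURL` directly. This is a sketch, not a description of how ISS tested the change: real browsers also provide PAC helper functions such as `isPlainHostName` and `shExpMatch`, which this bare sandbox does not, and `vm` is not a security boundary for untrusted scripts.

```javascript
const vm = require('vm');

// Run PAC source in a fresh context and ask it which proxy to use for one URL.
// Top-level function declarations in the script become properties of `sandbox`.
function evaluatePac(pacSource, url, host) {
  const sandbox = {};
  vm.runInNewContext(pacSource, sandbox);
  return sandbox.FindProxyForURL(url, host);
}

const oldPac = 'function FindProxyForURL(url, host) { return "PROXY 128.240.229.4:8080"; }';
const newPac = 'function FindProxyForURL(url, host) { return "DIRECT"; }';

console.log(evaluatePac(oldPac, 'http://www.example.com/', 'www.example.com')); // PROXY 128.240.229.4:8080
console.log(evaluatePac(newPac, 'http://www.example.com/', 'www.example.com')); // DIRECT
```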

We’ve deliberately scheduled this change for a quiet time on campus to avoid major inconvenience should any problems arise; however, internal testing in ISS over the past few months has shown that this change should be transparent to users.

If you’re aware of any applications or systems that currently have manually set proxy addresses (e.g. “wwwcache.ncl.ac.uk”), these can now be removed prior to the eventual full retirement of the web proxies late in 2014.

Disappearing messages to lists

We had a question last week about some messages sent to a local mailing list not reaching the members of the list. When we looked at the logs on the list server we saw that the messages were being discarded as duplicates/loops. This is an explanation of why this happens and how to avoid it.

Every mail message has an identifying label associated with it, which should be globally unique. This label is called a message-id (commonly shortened to msgid). The system we use to run our mailing lists (Sympa) relies on this to stop looping messages being sent to a list repeatedly. In the version we use at the moment, the list of msgids that have been seen is only cleared out when the server is restarted for maintenance, which happens irregularly (later versions expire entries in the cache after a fixed time). This is a reasonably common technique to protect lists from mail loops; I remember implementing it in the locally written MLM when I worked at Mailbase.
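The loop protection described above can be sketched as a record of seen msgids. This is a generic illustration in JavaScript, not Sympa’s code: the `ttlMs` option models the later behaviour of expiring entries after a fixed time, and `restart()` models the current version clearing its list when the server restarts.

```javascript
// Generic sketch of msgid-based duplicate/loop suppression (not Sympa's implementation).
class MsgIdCache {
  // ttlMs = Infinity: entries are kept until restart(), like the version in use.
  // A finite ttlMs models later versions that expire entries after a fixed time.
  constructor(ttlMs = Infinity) {
    this.ttlMs = ttlMs;
    this.seen = new Map(); // msgid -> time it was (last) accepted, in ms
  }

  // Returns true if the message should go to the list, false if it is
  // silently discarded as a possible duplicate or loop.
  accept(msgid, nowMs) {
    const acceptedAt = this.seen.get(msgid);
    if (acceptedAt !== undefined && nowMs - acceptedAt < this.ttlMs) return false;
    this.seen.set(msgid, nowMs);
    return true;
  }

  // Stands in for a server restart, which is when the old version clears its list.
  restart() {
    this.seen.clear();
  }
}

const cache = new MsgIdCache();
console.log(cache.accept('<abc@example.org>', 0));     // true
console.log(cache.accept('<abc@example.org>', 60000)); // false (silently discarded)
```

This is why two genuinely different messages that happen to share a msgid vanish: the second one is indistinguishable from a loop.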

The system deliberately sidelines the message silently because it thinks this is a possible loop and sending a message to the sender has a fair chance of making things worse.

Unfortunately some mail programs will create messages with identical msgids. I believe that some versions of Outlook do this if you use the “Resend” option on an existing message. The workaround is to not use “Resend” unless you’re resending a message that failed to deliver. Some old versions of the Pine mail program generated duplicates occasionally because they used the current time to create the msgid but missed out one of the components (hours, minutes or seconds; I can’t remember which).
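The Pine problem is easy to reproduce in miniature. The generator below is hypothetical (it is not Pine’s actual msgid format) and drops the seconds, since the post doesn’t say which component was missing; two messages sent in the same minute then share a msgid. The second function shows the usual remedy of combining a full timestamp with random data.

```javascript
const crypto = require('crypto');

// Hypothetical buggy generator: builds the ID from the clock but omits the seconds.
function buggyMsgId(date, host) {
  const pad = n => String(n).padStart(2, '0');
  const stamp =
    `${date.getUTCFullYear()}${pad(date.getUTCMonth() + 1)}${pad(date.getUTCDate())}` +
    `${pad(date.getUTCHours())}${pad(date.getUTCMinutes())}`; // no seconds
  return `<${stamp}@${host}>`;
}

// Safer: millisecond timestamp plus 8 random bytes, so IDs generated in the same
// instant on the same host are still overwhelmingly likely to differ.
function saferMsgId(date, host) {
  return `<${date.getTime()}.${crypto.randomBytes(8).toString('hex')}@${host}>`;
}

const first = new Date(Date.UTC(2013, 0, 1, 10, 15, 5));
const second = new Date(Date.UTC(2013, 0, 1, 10, 15, 35));
console.log(buggyMsgId(first, 'mail.example.org') === buggyMsgId(second, 'mail.example.org')); // true
console.log(saferMsgId(first, 'mail.example.org') === saferMsgId(second, 'mail.example.org')); // false
```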

We’ve found another instance in which Outlook will send messages with identical msgids and that’s using templates. If you use Outlook templates in non-cached mode (more specifically if you use a template created when in non-cached mode) then messages created from that template will all have the same msgid. See discussion at http://social.technet.microsoft.com/Forums/en-US/exchangesvrtransport/thread/890412c8-f992-4973-b504-f1d069b0266f/

The suggested workaround for this is to change to using Outlook in Cached mode (see http://www.ncl.ac.uk/itservice/email/staff-pgr/outlook/cachedexchangemode/) and then recreate the templates (you need to create new templates because the fault is attached to the template). If for some reason cached mode isn’t suitable all the time (for example if you regularly use different desktop machines) you just need to turn it on when creating the template.

Painless document sharing/collaboration with change control

We’ve offered the ISS Subversion Service for some time now, allowing groups of people to share and collaborate on groups of files whilst maintaining full change control. The only downside to this service is that it generally requires the users to have at least some idea of the concepts behind version control systems.

I’ve recently discovered an interesting open-source software project called SparkleShare which provides the same functionality to groups of people working on the same set of files but manages the change control work in the background, using a client similar to the Dropbox client. Changes to files get automatically committed into the repository and synced to all users. The SparkleShare client is available for Windows, Mac OSX and Linux and uses a git repository as the backend store. As git is available on “aldred”, our Linux public time sharing server, you can use an ISS Unix account as a git repository for a group using SparkleShare.

After installing the client, simply paste the contents of the key file in your SparkleShare main folder into your Unix ‘.ssh/authorized_keys’ file. Then create a new git repository in your Unix home directory (eg: git init --bare ~/myfirstrepo) and then in the SparkleShare client add a new project with address ssh://userid@aldred.ncl.ac.uk and remote path ~/myfirstrepo. Done!
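If you’d rather script the key step than paste by hand, the sketch below appends a public key to `~/.ssh/authorized_keys` only if it isn’t already there. It is an illustration, not part of SparkleShare; the key file’s name in the SparkleShare folder isn’t given above, so `keyFilePath` in the usage comment is a placeholder. OpenSSH’s default `StrictModes` setting makes sshd refuse keys if `~/.ssh` or `authorized_keys` is group- or world-writable, hence the 0700/0600 modes.

```javascript
const fs = require('fs');
const path = require('path');

// Append a public key line to <sshDir>/authorized_keys unless it is already present.
// Returns true if the key was added, false if it was already there.
function addAuthorizedKey(sshDir, publicKeyLine) {
  const key = publicKeyLine.trim();
  // Modes only apply when the directory/file is created (and are subject to umask).
  fs.mkdirSync(sshDir, { recursive: true, mode: 0o700 });
  const file = path.join(sshDir, 'authorized_keys');
  const existing = fs.existsSync(file) ? fs.readFileSync(file, 'utf8') : '';
  if (existing.split('\n').some(line => line.trim() === key)) return false;
  // Make sure the new key starts on its own line.
  const sep = existing.length > 0 && !existing.endsWith('\n') ? '\n' : '';
  fs.appendFileSync(file, sep + key + '\n', { mode: 0o600 });
  return true;
}

// Usage (keyFilePath is a placeholder for the key file in your SparkleShare folder):
// addAuthorizedKey(path.join(require('os').homedir(), '.ssh'),
//                  fs.readFileSync(keyFilePath, 'utf8'));
```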

SkyDrive users – act now to keep 25GB for free

Microsoft have announced changes to Windows Live SkyDrive, introducing paid tiers of storage and reducing the free offering to 7GB. Existing users can (for a limited time) log in and hit a button to keep the 25GB they currently have, for free.

Go and do that now: skydrive.live.com

Once you’ve done that, you might want to download the long-awaited SkyDrive client applications that have been released for Mac and PC.