Last week, Jonathan Noble and I attended the WinOps 2016 conference in London; this was a conference centred around the subject of using DevOps working practices with Windows Servers, which is something that Microsoft are focusing a lot of effort on, and something that ISG have taken a lot of interest in. I’ve been told that videos of the talks will soon be available on http://www.winops.org, and I would strongly recommend them for anyone who works with Windows Servers in any capacity. (Update: videos are now available at https://www.youtube.com/playlist?list=PLh-Ebab4Y6Lh09SnM63euerPW0-pauO7k).

The day started with a keynote speech by Jeffrey Snover, from Microsoft; I’m not sure of his current job title as it keeps changing, but he invented PowerShell and is basically in charge of Windows Server.

The speech covered the evolution of Windows Server from Windows NT, right through to Server 2016, explaining how the product was continuously changed to meet the needs of the time, which flowed nicely into an overview of Server 2016, designed to enable cloud workloads.

A big part of Server 2016 is the concept of ‘Just Enough Operating System’ and the new Nano Server installation option. For those not aware, Nano Server is the next logical step after Server Core; where Server Core removed the Desktop Experience, in order to improve the security, reliability, and speed of your servers, Nano Server strips out absolutely everything unnecessary. It’s not possible to log in to a Nano Server in any way – they’re controlled entirely by remote management tools, and PowerShell Remoting. This has enabled Microsoft to shrink the Operating System down to under 500MB. It takes up less space, runs faster, boots in seconds, and requires only a small fraction of the number of patches and reboots that Server with Desktop Experience requires. Jeffrey went as far as to say that Nano Server is “the future of Windows Server.”

Also coming with Server 2016 is support for Docker-compatible Containers. If you’re not familiar with these, it’s worth getting acquainted – one server can run multiple containers, and each will function as if it were its own server, completely isolated from the others, but sharing the underlying operating system and other resources from the host machine. The container itself is a single object, making it very simple to transfer between hosts, or to duplicate and spin up multiple copies of a containerised application.

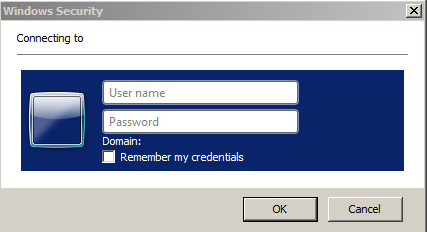

A couple of other important technologies were touched upon. Windows Server Apps (WSA) is a new way of deploying applications based on AppX; server support for MSI will be deprecated in favour of WSA, largely because MSI is horrible and unsuitable for server environments. Just Enough Administration (JEA) is a new PowerShell feature which allows the creation of PowerShell endpoints that users can connect to in order to perform a specified subset of admin tasks, without needing to be administrators on the target server (even if the tasks would usually require it). This means that you don’t need to hand over the keys to your kingdom in order to let someone perform a few updates or run backups.

The second talk of the day was by Iris Classon, a C# MVP who works for Konstrukt. Iris’s talk was entitled “To The Cloud” and discussed the journey that her company made while moving their services to Azure. Key points of the talk were discussions around the automation of manual processes, such as unit testing, integration testing, and operational validation testing, as well as deployment. She also advocated heavily for using JEA (mentioned above) to prevent system administrators from having access to sensitive data that they didn’t need to see.

The third talk of the day was by Ed Wilson, who works on Microsoft’s new Operations Management Suite (OMS), and is the author of the Hey, Scripting Guy! blog. The talk was primarily an overview of OMS, which is a suite of tools designed to offer Backup, Analytics, Automation, and Security Auditing for hybrid cloud/on-premises environments. OMS is constantly under active development with new features coming online all the time, so it’s definitely worth keeping an eye on. Highlights so far are:

- OMS Automation (formerly Azure Automation), which has been described as PowerShell as a Service – it offers a repository where PowerShell runbooks can be stored and run on a schedule.

- Secure Credential Store – exactly what it sounds like – store credentials securely so that you can use them from the rest of OMS.

- Windows and Linux machines are supported for monitoring (as well as anything else that can output a text-based log file).

Fun fact mentioned in this talk: PowerShell is now ten years old. Probably time to pick it up if you haven’t yet done so 😉

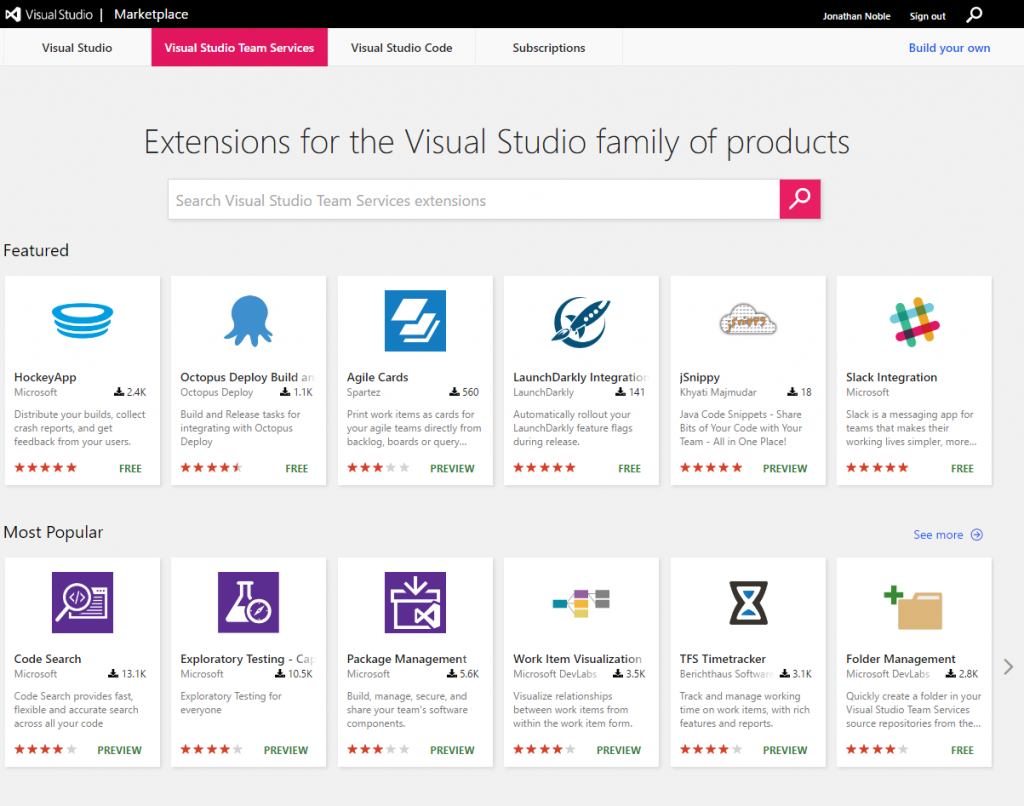

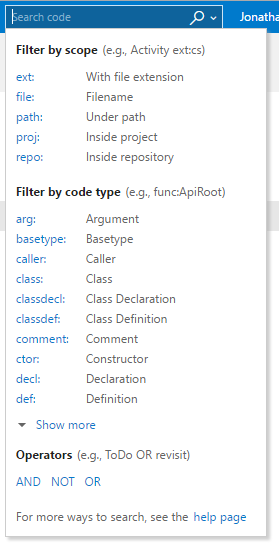

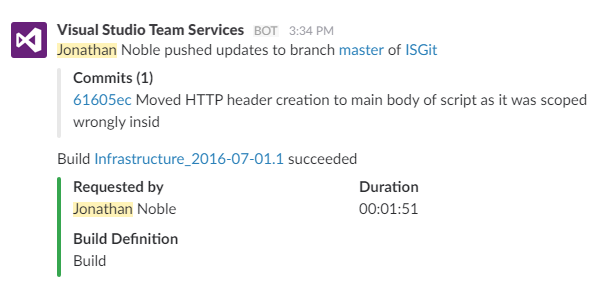

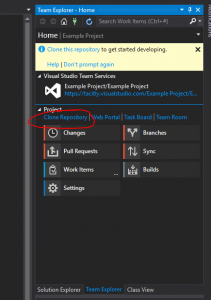

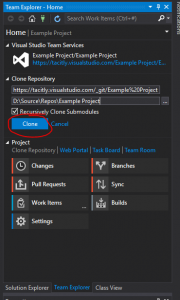

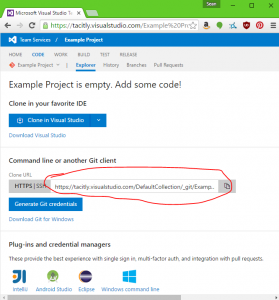

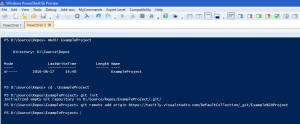

Next up was Michael Greene, from Microsoft’s Enterprise Cloud Customer Advisory Team, who gave an excellent talk about using Visual Studio Team Services, PowerShell, and Pester to implement a release pipeline for applications and infrastructure. This was particularly interesting to me, as these are the tools that we’re using in ISG, and I’ve spent the last couple of months trying to do exactly this. Michael was strongly advocating configuring infrastructure as code, which allows the use of proper source control, automated testing, and automated deployment (only if all of the automated tests pass); working in this way has been shown to greatly improve reliability and agility of IT services.
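
To make the "deploy only if all of the automated tests pass" idea concrete, here is a minimal sketch in Python rather than the Visual Studio Team Services and Pester tooling from the talk. The function and test names (`run_pipeline`, `config_is_valid`, `port_is_open`) are hypothetical and only illustrate the gating logic, not any real pipeline's API.

```python
from typing import Callable, List


def run_pipeline(tests: List[Callable[[], bool]], deploy: Callable[[], None]) -> bool:
    """Run every test, then call deploy() only if all of them passed."""
    # Run all tests (rather than stopping at the first failure)
    # so that every problem is visible in a single run.
    results = [test() for test in tests]
    if all(results):
        deploy()
        return True
    return False


# Hypothetical checks standing in for real infrastructure tests.
def config_is_valid() -> bool:
    return True


def port_is_open() -> bool:
    return False


deployed: List[str] = []

# All tests pass, so the release is deployed.
first = run_pipeline([config_is_valid], lambda: deployed.append("v1"))

# One test fails, so nothing is deployed.
second = run_pipeline([config_is_valid, port_is_open], lambda: deployed.append("v2"))

print(deployed)
```

The point of the gate is that a failing test blocks the release automatically, instead of relying on someone remembering to check the results.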

Some excellent further reading on this subject was offered in the form of Microsoft’s whitepaper: The Release Pipeline Model (http://aka.ms/thereleasepipelinemodel) and Steven Murawski’s DevOps Reading List (http://stevenmurawski.com/devops-reading-list/).

Soundbite: If you want to work with Windows Server, the most important technology to learn right now is Pester.

During lunch we had a wander round stalls set up by vendors trying to sell their various DevOps-related products. One that interested me was Squared Up, a configurable dashboard that presents SCOM data (among other things) in a nice, easy to understand manner. I signed up for a free trial, before we discovered that the University already pays for this product. I need to chase this up with our contacts to get myself access to it.

After lunch, the talks split into two streams, so we split up in order to cover more ground. I’ll let Jonathan describe the talks he went to here…

My first afternoon session was with Richard Siddaway, covering Nano Server and Containers. This was really a practical demo following on from Jeffrey’s keynote, stepping through the process of configuring both, with the caveat that all of this is pre-release at the moment. It was interesting to note that while Microsoft initially started out by building a PowerShell module to manage containers directly, as a result of feedback they’re re-engineering that to just be a layer on top of Docker, which is the tool that most people use to manage containers today. Another thing that I picked up was that as things stand, there’s no way to patch containers, yet they need to be at the same patch level as the host. The solution is to just blow the container away and make a new one, but as was demonstrated, that’s quick and easy to do, so it’s probably the most sensible approach anyway. We need to examine these two technologies carefully over the coming months. Richard mentioned the need to consider version numbering on containers, and which workloads they are suitable for. That’s partly dictated by the workloads that Nano Server will support, which will be limited at launch, but will likely grow reasonably quickly.

Following that, I went to a panel session on technologies, which gave me a shopping list of things to skill up on! The panel agreed that the two most important aspects of the toolchain were Source Control and Build, where the specific tool isn’t important – for Build it just needs to be something that will run scripts, and while it was suggested that any Source Control would be OK, if you didn’t already have something, you should choose Git. On the subject of the most significant tools from the community, Pester and Docker were highlighted. Other things that the panel suggested learning about were JavaScript/Node (although TypeScript is preferable to generic JavaScript), OMS, Linux, and Visual Studio Code. Another couple of interesting points I took from this were that containers don’t remove the problem of configuration management; they just move it, and that Azure Stack would work well for a hybrid model where you would usually host a workload on-premises, but could burst up to the cloud for particularly busy periods.

…and while he was doing that, I went to a talk by Gael Colas – a Cloud Automation Architect (if anybody is thinking of overhauling our job titles any time soon, I quite like this one) – about configuration management theory.

This was one of my favourite talks of the day – Gael was making the case for short-lived, immutable servers. The general concept is that a server should be built from configuration code or scripts (the exact method is unimportant; what matters is that it’s completely automated), and then never changed at all – no extra configuration, no quick fixes, no patches. When the server needs to be changed (for patches, for example), the source configuration/script should be updated instead, and a new server deployed from that. This method ensures that we always know the exact configuration of a server and we’re always able to rebuild it identically, every time – this has massive DR and service reliability benefits. This was referred to as Policy Driven Infrastructure. Gael did acknowledge that there are some applications for which this is unsuitable, but they’re rapidly shrinking in number.
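
As a rough illustration of the immutable-server idea (my own sketch, not something shown in the talk), the Python below models a server as a frozen object that records a fingerprint of the configuration it was built from. The names `Server`, `config_hash`, and `build_server`, and the example configuration keys, are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: attributes cannot be changed after creation
class Server:
    name: str
    config_hash: str


def config_hash(config: dict) -> str:
    """Return a short, stable fingerprint of a configuration."""
    canonical = json.dumps(config, sort_keys=True)  # key order must not matter
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def build_server(name: str, config: dict) -> Server:
    """Stand-in for a fully automated build from source configuration."""
    return Server(name=name, config_hash=config_hash(config))


# Hypothetical configuration held in source control.
config_v1 = {"os": "nano", "patch_level": 1}
old_server = build_server("web-01", config_v1)

# To patch, update the configuration and build a replacement;
# the old server is never modified in place.
config_v2 = {**config_v1, "patch_level": 2}
new_server = build_server("web-01", config_v2)

# Rebuilding from the same configuration gives an identical result.
rebuilt = build_server("web-01", config_v1)
```

Because the fingerprint is derived only from the configuration, two servers with the same fingerprint are known to have been built the same way, which is where the DR benefit comes from.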

The next session I went to was a panel session called DevOps Culture in a Windows World, which mostly turned into people offering advice about how they’ve convinced their organisations to embrace DevOps working practices. You’ll probably see me attempt to use most of the ideas presented over the next few months – this blog post is the start 😉

Two suggestions are worth mentioning here. The first was that it’s important to improve visibility, which I think is something that our department could benefit greatly from: everyone should be able to easily see what everyone else is doing, and should be encouraged to share and help each other (I think we are encouraged to share, but we currently lack the tools to easily do this; I have some ideas about that one but need to work them through). The second was that we should look at our services like products and consider their full lifespan when we set them up, instead of thinking of the setup of a service as a project which is completed once the service is up and running, and then left to rot indefinitely.

The last proper talk of the day was given by Peter Mounce of Just Eat, who was discussing how they run their performance testing. Performance is very important to Just Eat, and they work to keep their applications fast by testing their production environment twenty-four hours a day. The theory is that running performance tests in QA is meaningless, because it’s impossible to replicate the behaviour of millions of real people using the production application, so they simply pile a load of fake load on their production servers. The fake load increases as real load increases, so that they’re effectively doubling the load on their application all the time – this means that they know that they can take that much load, and they’re able to disable the fake load in case of emergency to handle massive amounts of real load. In general, I’m not sure that the performance testing elements are that applicable to us at this stage, but there was a lovely soundbite which is very applicable to us: Embrace the fact that things are going to break; get better at fixing them quickly.
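
The arithmetic behind the fake-load approach can be sketched in a few lines of Python. This is only my reading of the idea as described, assuming the fake load exactly mirrors the real load; the function names and the request figures are made up for illustration and are not Just Eat's actual tooling or numbers.

```python
def synthetic_load(real_load: int, enabled: bool = True) -> int:
    """Fake load mirrors real load, unless the kill switch is off."""
    return real_load if enabled else 0


def total_load(real_load: int, enabled: bool = True) -> int:
    """Total requests hitting production: real plus synthetic."""
    return real_load + synthetic_load(real_load, enabled)


# Normal running: 1,000 real requests are matched by 1,000 fake ones,
# so production is continuously proven at double the real load.
normal = total_load(1000)

# Emergency: real traffic spikes to 1,800, so the fake load is switched off.
# The total stays below the level already proven in normal running.
emergency = total_load(1800, enabled=False)

print(normal, emergency)
```

In other words, the fake load doubles as reserved headroom: switching it off frees capacity that is already known to work.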

Finally, everybody came back together for a panel session and discussion, which was interesting, but there was nothing exceptional to report. Then we went for drinks at the expense of Squared Up.