Image by Trang Le from Pixabay

In a world where the demands on medical professionals are at an all-time high, effective communication has never been more crucial. Imagine a scenario where physicians must deliver devastating news to a patient: news that could alter the course of a life, news that might even imply the end of life. I have often wondered how people can deal with that as part of their job. I found out through my first project as a Learning Technologies Developer at the University: the redevelopment of a web service that supports roleplaynorth (RPN).

RPN plays a crucial role in preparing our MBBS students to deal with those types of scenarios. Through tailored, realistic scenarios, it supports the theory of good communication and helps customers get ready to apply what they have learned in practice.

This is the first in a series of three posts about the redevelopment of RPN, covering the following aspects of the project: specifications, system features, and future plans. In this post I will walk you through the specification process.

Specifications: Building the Foundations

Firstly, we looked into what the existing system does. Amongst many other features, it allowed RPN staff to add events and update role players, using spreadsheets to manage who was on which event and the role they would play. This information would then be added to the event on the system. RPN also had a separate AccessDB that provided the queries required for payroll reports.

Action Function Requirement Document (AFR)

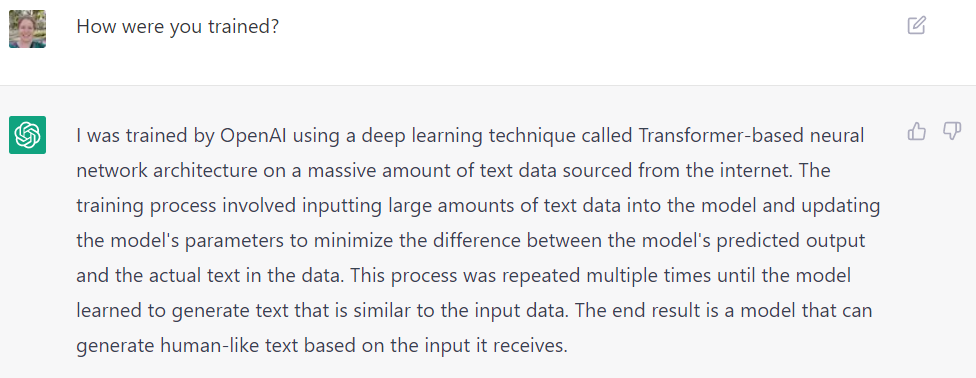

I used a document our team has worked with previously that lists the tasks/actions, functions and data requirements, to get RPN to think about the whole process and break it down into steps. This document was used to gather feedback on how RPN expected the actions to work, and it gave them the opportunity to add tasks if required.

Here’s an example of what that looks like:

| ACTION/TASK | FUNCTION | DATA REQUIREMENTS | NOTES/FEEDBACK |
|---|---|---|---|
| a) Events | Create, Update, Delete Events | Start date, End date, description of event | |
| b) Event Tag | Assign Event Tag to event. This can be used to filter the events | Event Tag – Name/Title | |
| c) Add/Remove Role Player – Event | On the events page add a role player to the event | Role Player, Event | Does this need to be automatic or do you want to add them as pending first until they have confirmed participation? |
| d) Scenario | Create, Update, Delete Scenario | Scenario – title, description | |

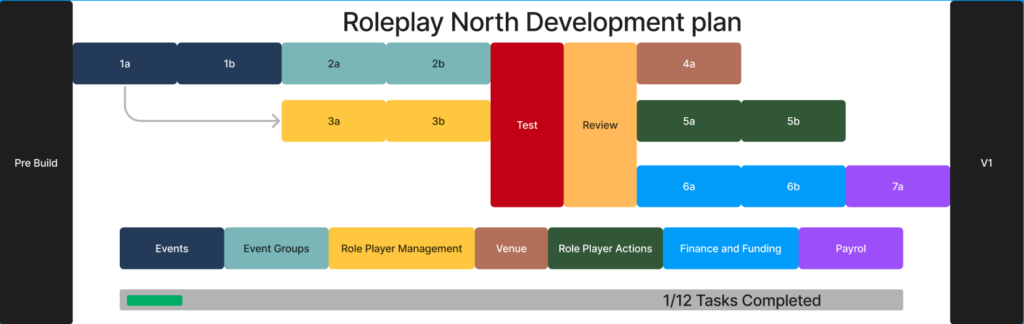

After feedback from RPN, we converted this document into a more function-focused document. Breaking down actions into tasks allowed us to plan what needed to be developed first, including which actions could be worked on independently or by other members of the team. Here’s what we ended up with.

From this Gantt chart you can see that the Events block (1a, 1b) and the Event Groups block (2a, 2b) need to be completed before the Venues block (4a) is worked on. You can also see that once 1a is completed, someone else can start work on Role Player Management (3a, 3b). We also allowed time for testing and reviewing what the new system does, giving RPN staff the chance to influence the build and find bugs. We had multiple testing stages: one after the Event Groups and Role Player Management blocks were completed, and another in April after all listed tasks were completed.
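The ordering described above can be written down as a small dependency graph. The following is a minimal Python sketch and not the tooling used on the project: the `dependencies` mapping and the `build_waves` helper are hypothetical names, and the sketch assumes that tasks within a block do not depend on each other, which the chart description does not confirm.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Task id -> set of task ids that must be finished first.
# Assumed from the chart description: 4a waits for blocks 1 and 2,
# and 3a/3b can start once 1a is done.
dependencies = {
    "1a": set(),
    "1b": set(),
    "2a": set(),
    "2b": set(),
    "3a": {"1a"},
    "3b": {"1a"},
    "4a": {"1a", "1b", "2a", "2b"},
}


def build_waves(deps):
    """Group tasks into waves; tasks in the same wave can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything unblocked right now
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished to unblock successors
    return waves


waves = build_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

Under these assumptions the first wave contains 1a, 1b, 2a and 2b, and the second contains 3a, 3b and 4a. In practice 3a and 3b could begin as soon as 1a alone is finished; the wave grouping is a simplification that only shows which tasks never block each other.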

The first iteration included all the fundamental features and actions of the website, including user authentication, role-based access, basic management of events and customers, and communication tools. Nothing too complicated, but essential to the functioning of the website and a good starting point for any future project.

The above image and the AFR document do not show how long each section took, because unknown variables such as feedback, changes in requirements and staff commitments affected the timeframes. However, through a continuous review process we added weight or complexity to these tasks.

You can also see that 1a and 1b are part of the Events block by looking at the legend below the branches, and you can see which tasks were completed. We also added a cross next to each item once it was complete, to keep us updated on where we were in the build without digging through the code.

We then created wireframes showing how the new system could work. With the AFR, the Gantt chart and the wireframes, we were able to clearly outline what we intended to build for RPN. In my next post I will go through the features.