There has been a lot of discussion in the FMS TEL office about ChatGPT over the past couple of weeks. Michael and Eleanor have spent some time putting this post together to help our readers to learn a bit more about ChatGPT, and to catch up on the discourse around it in HE in general.

We used ChatGPT to generate an about page for the blog last week, which you may have seen. It provides a good example of an introduction for a website. It feels somewhat generic, gets a few things wrong, and expresses itself a little strangely in places. At the same time, it’s a decent starting point to work from.

What is ChatGPT?

ChatGPT is one of many advanced Artificial Intelligence models that have been trained to interpret inputs (e.g. questions) in natural language (hence ‘chat’) and answer them. Interacting with ChatGPT feels similar to interacting with a chatbot or virtual assistant on the surface, but the technologies are completely different.

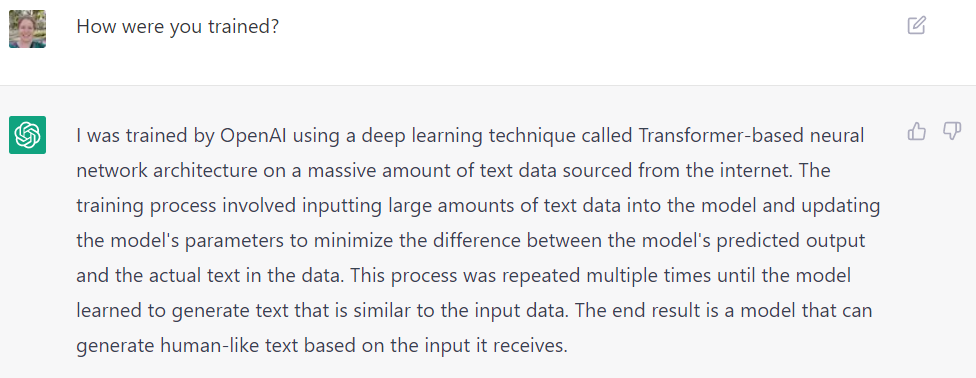

How does ChatGPT work?

The data that ChatGPT draws from is an offline dataset which was created in 2021. The exact content of the dataset is not clear; however, ChatGPT is able to formulate responses on a massive range of topics, so it is safe to assume the dataset is enormous and most likely taken from public internet sites (i.e. not password-protected sites). Part of the training process involved feeding computer code into the model, which helped it learn logic and syntax – something that is present in natural languages in the form of grammar. Human feedback was also used to help the model improve: trainers supplied example conversations (‘supervised fine-tuning’) and ranked the model’s responses (‘reinforcement learning from human feedback’).

While ChatGPT can produce extended responses drawing on its huge dataset, it doesn’t understand what it is producing. It is similar to a parrot repeating after you: the parrot can mimic the sounds but has no true understanding of the meaning.

What are people saying about ChatGPT and University Education?

Assessment Security

With its ability to generate text that looks like a human wrote it, it is natural to be worried that students may use ChatGPT for assessed work. Many automated text editors and translators are already available, though they work in a different way. Tools like Word’s spellchecker and Grammarly can both be used to improve writing, but they do not generate text. ChatGPT is different in this respect, and it is free, quick, and easy for anyone to use.

“…The big change is that this technology is wrapped up in a very nice interface where you can interact with it, almost like speaking to another human. So it makes it available to a lot of people.”

Dr Thomas Lancaster, Imperial College London in The Guardian

Assessment security has always been a concern, and as with any new technology, there will be ways we can adapt to its introduction. Some people are already writing apps to detect AI text, and OpenAI themselves are exploring watermarking their AI’s creations. Google Translate has long been a challenge for language teachers with its ability to generate translations, but a practised eye can spot the deviations from a student’s usual style or expected skill level.

Within HE, clear principles are already in place around plagiarism, essay mills and other academic misconduct, and institutions are updating their policies all the time. One area in which ChatGPT does not perform well is in the inclusion of references and citations – a cornerstone of academic integrity for many years.

Authentic assessment may be another element of the solution in some disciplines, and many institutions have been doing work in this area for some time, our own included. On the other hand, for some disciplines the ability to write structured text is a key learning outcome and is inherently an authentic way to assess.

Consider ChatGPT’s ability to summarise and change the style of the language it uses.

- Could ChatGPT be used to generate lay summaries of research for participants in clinical trials?

- Would it do as good a job as a clinician?

- How much time could be saved by generating these automatically and then simply tweaking the text to comply with good practice?

The good practice would still need to be taught and assessed, but perhaps this is a process that will become standard practice in the workplace.

Digital skills, critical thinking and accessibility

Prompting AI is certainly a skill in itself, just like knowing what to ask Google to get the answer you need. ChatGPT reacts well to certain prompts such as ‘let’s go step by step’, which tells it you’d like a list of steps. You can use that prompt to get a broad view of the task ahead, for example to get a structure for a business plan or an outline of what to learn in a given subject. As a tool, ChatGPT can be helpful in collating information and combining it into an easily readable format. This means that time spent searching can instead be spent reading. At the same time, it is important to be conscious of the fact that ChatGPT does not understand what it is writing and does not check its sources for bias or factual correctness.

ChatGPT can offer help to students with disabilities, or neurodivergent students who may find traditional learning settings more challenging. It can also parse spelling errors and non-standard English to produce its response, and tailor its response to a reading level if prompted correctly.
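
For readers who like to see things written down, here is a minimal Python sketch of the prompting ideas in this section. It only assembles the text you would paste into ChatGPT and does not contact any service. The function name `build_prompt`, its parameters, and the wording of the reading-level sentence are our own assumptions for illustration; only the ‘let’s go step by step’ phrase comes from the discussion above.

```python
def build_prompt(task, step_by_step=False, reading_level=None):
    """Assemble a plain-text prompt to paste into ChatGPT.

    Hypothetical helper for illustration only: nothing is sent anywhere.
    """
    parts = [task.strip()]
    if reading_level:
        # Assumed wording; adjust to whatever phrasing works for you.
        parts.append(f"Please write the answer for a {reading_level} reading level.")
    if step_by_step:
        # The phrase mentioned above that asks for a list of steps.
        parts.append("Let's go step by step.")
    return " ".join(parts)


prompt = build_prompt(
    "Outline the structure of a business plan for a small bakery.",
    step_by_step=True,
)
print(prompt)
```

Keeping the optional phrases as separate switches makes it easy to try the same task with and without them and compare the responses.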

Conclusions

ChatGPT in its current free-to-use form prompts us to change how we think about many elements of HE. While naturally it creates concerns around assessment security, we have always been able to meet these challenges in the past by applying technical solutions, monitoring grades, and teaching academic integrity. Discussions are already ongoing in every institution on how to continue this work, with authentic assessment coming to the fore as a way of breaking our heavy reliance on the traditional essay.

As a source of student assistance, ChatGPT offers a wealth of tools to help students gather information and access it more easily. It is also a challenge for students’ critical thinking skills, just like the advent of the internet or Wikipedia. It is well worth taking the time to familiarise oneself with the technology, and to explore how it may be applied in education, and in students’ future workplaces.

Resources

- Try ChatGPT – you will need to make an account with OpenAI and possibly wait quite a while as the service is very busy.

- Try DALL-E – this AI generates images based on your inputs.

Sources and Further Reading

- University of Kent Webinars, 8th February 2023 (2.5h of content)

- Interview with Sam Altman, CEO of OpenAI (long watch)

- OpenAI’s attempts to watermark AI text hit limits | TechCrunch

- College student claims app can detect essays written by chatbot ChatGPT | Artificial intelligence (AI) | The Guardian

- What implications does ChatGPT have for assessment? | Wonkhe

- Lecturers urged to review assessments in UK amid concerns over new AI tool | Artificial intelligence (AI) | The Guardian

- FE News | Chat-GPT- is this an alert for changes in the way we deliver education?