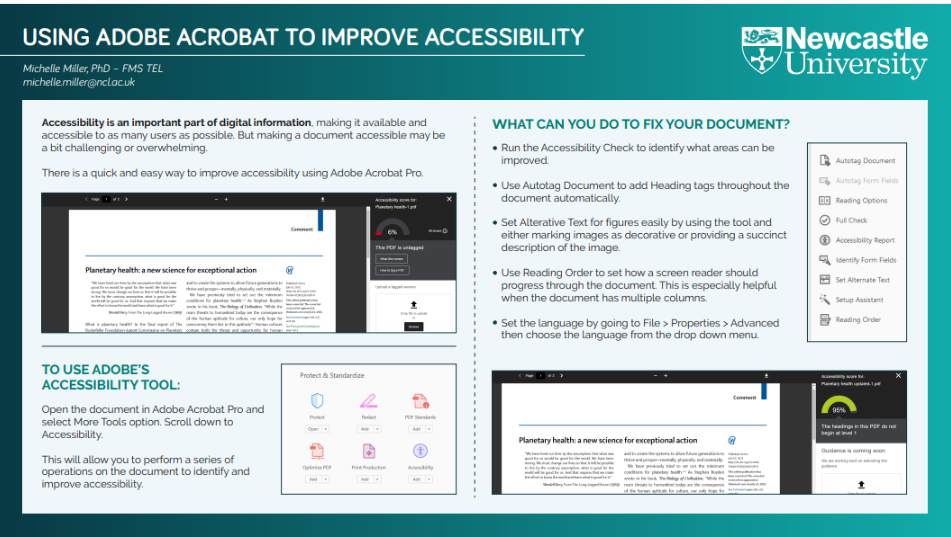

Since joining the University in April 2024, I’ve been fortunate to witness, and contribute to, the continued development of our Digital Skills provision. While I’m proud of the progress we’ve made, it’s important to acknowledge the strong foundation laid by my predecessor, Dr. Michelle Miller, and the contributions of the Technology-Enhanced Learning team. Their hard work created a robust infrastructure, which I’ve been able to build on by adapting and updating existing resources and by exploring new opportunities to expand and enhance our offerings.

My team of demonstrators and I continue to deliver in-person workshops to undergraduate and postgraduate students across the Faculty of Medical Sciences (FMS) and the Faculty of Science, Agriculture and Engineering (SAgE). I’ve also worked closely with module leaders and key stakeholders to meet our students’ growing demand for digital skills resources. This has led to the creation of new courses, tailored support for specific modules, guidance for postgraduate public talks, and an expanded team of postgraduate demonstrators.

Website Revamp

Our website, www.digitalskills.ncl.ac.uk, the central hub for our courses and resources, has recently undergone a visual and functional overhaul. My goal was to make it more accessible, device-friendly, and easier to navigate, ensuring that both internal and external users can access our materials. The website hosts a wealth of resources, including workshop materials and tools to help students and staff enhance their digital literacy.

Key content areas remain in place, including:

- Data Analysis

- Document Management

- Poster & Presentation Skills

- Specialist Software

I’ve also introduced new dedicated sections:

- Introductory Series: Offering beginner-friendly materials for various software tools.

- Additional Resources: A curated collection of miscellaneous courses and guidance to support diverse needs.

The website also continues to host online induction content for multiple areas of the University. These inductions have been refined to incorporate guidance from governing bodies and to share best practice, including Canvas guidance on AI and cybersecurity, software package downloads, and EndNote support. We are grateful to the Learning and Teaching Development Service, Library Services, and NUIT for providing these materials.

To further showcase the expertise of our team, I’ve also added links to our postgraduate demonstrators’ current studies and LinkedIn profiles. This not only highlights their contributions but also gives students and staff the opportunity to connect with them and learn from their diverse experiences.

New Courses and Collaborations

Through collaboration with various faculty areas, I’ve developed three new courses to meet the evolving needs of our students:

- Excel Foundations: Designed for all skill levels, this modular course covers everything from spreadsheet basics to advanced data analysis tools and the creation of impactful tables and charts.

- Introduction to BioRender: A beginner-friendly guide to BioRender, tailored for those new to scientific illustration software.

- CMB3000: Project Write-up: A comprehensive course covering IT and library skills essential for Stage 3 Biomedical Sciences dissertations, including specialised EndNote guidance provided by Richard Lumb.

These courses reflect our commitment to equipping students with the digital skills they need to succeed in their academic and professional journeys.

New Recruits in the Student Demonstrator Team

This academic year, I’ve been fortunate to work with an exceptional group of postgraduate student demonstrators. Their expertise and diverse knowledge have been invaluable in supporting workshop attendees, as they offer context-specific assistance tailored to students’ needs.

Our returning demonstrators include:

- Douglas Amobi Amoke (Engineering)

- Mien Tran (Gender Studies)

- Brittni Bradford (Cultural Property Protection)

- Jennifer Cooke (Horror Media)

- Chinenye Ekemezie (Biosciences)

We’ve also welcomed new additions to the team:

- Algimantas Beinoravicius (Nutrition)

- Tiancheng Ren (Urban Planning)

- Duong Nguyen (Medicine)

- Maria Brigaoanu (Molecular Biology)

- Nana Yaw Aboagye (Bio-signal and Medical Image Analysis)

Their contributions have greatly enriched our provision. Additionally, I’ve supported departing demonstrators with fellowship claims, references, and other professional development opportunities.

Expanding Our Reach and Looking Ahead

While my team’s primary focus remains within FMS, we’ve continued to deliver digital skills sessions to postgraduate students in SAgE and are exploring opportunities to extend our support across the institution. Looking ahead, we’re excited to expand our offerings, including:

- Enhanced training on SPSS for statistical analysis.

- Digital organisation systems to boost student productivity.

- New resources for Python and other in-demand programming languages.

We look forward to continuing to support students and staff in developing essential digital skills. We encourage everyone to explore our updated resources, attend our workshops, and get in touch with suggestions on how we can further support digital skills development.

If you’d like to discuss how our team can assist you, please email me at: Elliot.cruikshanks@ncl.ac.uk